In the first two posts in this series we've discussed the notion of single-gene causation. We don't mean the usual issues about genetic determinism per se, which are often more a matter of deeper beliefs than of biology. We are asking what it means biologically to say that gene X causes disease Y. Is it ever right to use such language? Is it closer to right in some cases than in others?

As we've tried to show, even the cases that are considered 'single gene' causation usually aren't quite that. A gene is a long string of nucleotides with regions of different (or no known) function. Many different variants of a gene can arise by mutation, so neither the CFTR nor the BRCA1 gene as a whole actually causes cystic fibrosis or breast cancer. Instead, what seems to be meant in these cases is that someone carrying a particular variant has the disease because (literally) of that variant. Of course, that implies that the rest of the gene is working fine, or at least is not responsible for the disease. That is, only the particular short deletion or nucleotide substitution causes the disease, and no other factors are involved. Even in BRCA and cancer, the 'real' cause is the cellular changes that the BRCA1 variant allows to arise by mutation. Thus, if you think at all carefully about it, it's clear that the idea that gene X causes disease Y is a fiction most if not all of the time. But this can be just splitting hairs, because there are clear instances that seem reasonable to count as 'single gene' causation. We'll come back to this.

In this sense, everyone 'believes in' single-gene causation. But most of us would say that this applies only when the case is clear; that is, we believe in single-gene causation when there is single-gene causation, but not otherwise. That's a rather circular and empty concept. Better to say that everyone believes that single-gene causation applies sometimes.

More often these days, we know that no single nucleotide, nor even a single gene, causes a trait by itself. Many different factors contribute to the trait. But here we get into trouble, unless we mean multiple univariate causation, in which there are n causes circulating in the population but each case arises from only one of them: that factor is carried by the affected individual, and the effect is due, in the usual deterministic sense, to that single factor in that person. Type 1 diabetes, nonsyndromic hearing loss, and the vision disorder retinitis pigmentosa are at least partial examples. But this doesn't seem to be the general situation. Instead, what one usually means by multifactorial causation is that each of the 'causes' contributes some amount to the overall risk, or probability, of the outcome. This seems clearly unlike deterministic, billiard-ball causation, but it's not so clear that that's how people are actually thinking.

Here are some relevant issues:

Penetrance

A commenter on our first post in this series likened the idea of contributory or probabilistic causes to 'penetrance', a term for the probability that a given genotype will be manifest as a given phenotype; that is, the likelihood of having the phenotype if you carry the causal variant. In response to that comment, we referred to the term as a 'fudge factor', and this is what we meant. The term was first used (as far as we remember) in Mendelian single-locus 'segregation' analysis, to test whether a trait (that is, its causal genotype) was 'dominant' or 'recessive' with respect to qualitative traits like the presence or absence of a disease. The penetrance probability was one of the parameters in the model, and its value was estimated.

An important but unstated assumption was that penetrance was an inherent, fixed property of the genotype itself: wherever the genotype occurred in a family or population, its penetrance (its chance of generating the trait) was the same. There was no specific biological basis for this (a dominant allele that is dominant only sometimes!); it was basically there to allow models to fit the data. For example, if even one Aa person didn't have the dominant (A-associated) trait, the data simply could not fit a fully dominant model, but there could be many reasons, including lab error, for such 'non-penetrance'.
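To make the 'fudge factor' point concrete, here is a minimal sketch, assuming the simplest possible setting: a list of Aa carriers, each independently affected with the same penetrance. Real segregation analysis models whole pedigrees and ascertainment, which this ignores, and the counts below are hypothetical. It shows that a single unaffected carrier makes a fully penetrant dominant model impossible, while a freely estimated penetrance quietly absorbs the exception.

```python
import math

def log_likelihood(penetrance: float, affected: int, unaffected: int) -> float:
    """Log-likelihood of the observed affected/unaffected Aa carriers when each
    carrier independently shows the trait with probability `penetrance`."""
    if (penetrance == 0.0 and affected > 0) or (penetrance == 1.0 and unaffected > 0):
        return float("-inf")  # the data are impossible under this model
    ll = 0.0
    if affected:
        ll += affected * math.log(penetrance)
    if unaffected:
        ll += unaffected * math.log(1.0 - penetrance)
    return ll

def mle_penetrance(affected: int, unaffected: int) -> float:
    """Under this simple model the maximum-likelihood penetrance is the observed fraction affected."""
    return affected / (affected + unaffected)

# Hypothetical counts: 19 affected and 1 unaffected Aa carriers.
print(log_likelihood(1.0, 19, 1))  # -inf: one non-penetrant carrier rules out full dominance
print(mle_penetrance(19, 1))       # 0.95: the estimated parameter absorbs the exception
```

Nothing in the estimate says why the one carrier was unaffected; the parameter simply makes the model fit.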

A more modern approach to variable outcomes among individuals who share the genotype in question, one that leads to the issues we are discussing here and can be extended to quantitative traits like stature or blood pressure, is that the probability of an outcome, given that a person carries a particular genotype, is not built into the genotype itself but is context-dependent. That context dependence is what is implicitly assumed to give the genotype its probabilistic causal nature. An important part of that context is the rest of the person's genome.

The retrospective/prospective epistemic problem

Deterministic causation is easy: you have the cause, you have its effect. Probabilistic causation is elusive. What people mean by it is, first, that they have estimated a fractional excess of a given outcome in carriers of the 'cause' (e.g., a genotype at a particular site in the genome) compared to non-carriers. This estimate is based on a sample, with all the (usually unstated) issues that implies, but it is expressed as if there were a simple dice roll: if you have the genotype the dice are loaded one way, compared with the dice rolled for those without it. Again, as with penetrance, although one would routinely say this is context-dependent, each genetic 'effect size' is viewed essentially as inherent to the genotype, the same for everyone who carries it. If you do this kind of work you may object to what we just said, but this view is a major implicit justification for ever-larger sample sizes, meta-analysis, and other such strategies.
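The 'loaded dice' estimate is typically a ratio of outcome frequencies in carriers versus non-carriers. A minimal sketch, assuming hypothetical counts and using the standard log-scale (Katz) confidence interval for a risk ratio, shows why bigger samples look like the answer: with the same underlying proportions, the interval narrows tenfold in sample size, yet the point estimate is still one number averaged over whatever contexts the sample happened to contain.

```python
import math

def relative_risk(a: int, n1: int, c: int, n0: int, z: float = 1.96):
    """Risk ratio for carriers (a of n1 affected) versus non-carriers (c of n0 affected),
    with an approximate 95% confidence interval computed on the log scale."""
    rr = (a / n1) / (c / n0)
    se = math.sqrt(1 / a - 1 / n1 + 1 / c - 1 / n0)  # standard error of log(RR)
    return rr, rr * math.exp(-z * se), rr * math.exp(z * se)

# Hypothetical study with the same proportions at two sample sizes.
small = relative_risk(a=30, n1=200, c=20, n0=200)      # RR of about 1.5, wide interval
large = relative_risk(a=300, n1=2000, c=200, n0=2000)  # same RR, much narrower interval
print(small)
print(large)
```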

The unspoken thinking is subtle and built into the analysis, but it essentially invokes context dependence with the escape valve of the ceteris paribus (all else being equal) explanation: the probability of the trait that we estimate to be associated with a particular test variant is the net result of outcomes averaged over all the other causes that may apply; that is, averaged over all other contexts. As we'll see below, we think this is very important to be aware of.
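Written out as a sketch in our own notation (not a formula from any particular study), where Y is the trait, G the genotype at the test site, and C a set of 'other' contexts that the notation assumes could in principle be enumerated, the estimated risk is a weighted average:

$$
P(Y \mid G = g) \;=\; \sum_{c} P(Y \mid G = g,\, C = c)\; P(C = c \mid G = g)
$$

The weights P(C = c | G = g) are those of the people who happened to be sampled, so the same genotype can carry a different estimated risk in a sample with a different mix of contexts, even if no context-specific risk has changed.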

But there's another fact: when it comes to such other factors, our data are retrospective. We see outcomes after people with, and without, the genotype have been exposed to all the 'other' factors that applied in the past, whether or not we know about or have measured them, in the people we've sampled and across their range of contexts. But the risk, or probability, we associate with the genotype is by its very nature prospective. That is, we want to use it to predict outcomes in the future, so we can prevent disease, etc. But that risk depends on the genotype carriers' future exposures, and we have literally no way, not even in principle, to know what those will be, even if we have identified all the relevant factors, which we rarely have.

In essence, such predictions assume no behavior changes, no new exposures to environmental contaminants and the like, and no new mutations in the genome. This is a fiction to an unknowable extent! The risks we give people are extrapolations from the past. We do this whether or not we know that's what we're doing; with current approaches we're stuck with it and have to hope it's good enough. Yet we know better! We have countless precedents showing that environmental changes have massive effects on risk. For example, the risk of breast cancer in women with a BRCA mutation varies by birth cohort, increasing over time, as shown by Mary-Claire King et al. in a 2003 Science paper. Our personalized genomic probabilities are actually often quite ephemeral.
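A toy calculation shows how a retrospective estimate fails prospectively. This is a minimal sketch with made-up numbers (not King et al.'s data): carriers' risk is assumed to depend on a single exposure, the study averages over the exposure mix of a past cohort, and a later cohort is assumed to be exposed far more often. No carrier's context-specific risk changes, yet the reported figure is wrong for the people it is meant to advise.

```python
def marginal_risk(risk_by_context: dict, context_freq: dict) -> float:
    """Average carriers' context-specific risks over a given mix of contexts."""
    if abs(sum(context_freq.values()) - 1.0) > 1e-9:
        raise ValueError("context frequencies must sum to 1")
    return sum(freq * risk_by_context[c] for c, freq in context_freq.items())

# Hypothetical carrier risks under an exposure (illustrative values only).
risk = {"exposed": 0.8, "unexposed": 0.3}

past = {"exposed": 0.2, "unexposed": 0.8}    # exposure mix in the sampled (past) cohort
future = {"exposed": 0.6, "unexposed": 0.4}  # an assumed later cohort with changed exposures

estimated = marginal_risk(risk, past)   # what the study reports: about 0.4
realized = marginal_risk(risk, future)  # what future carriers actually face: about 0.6
print(estimated, realized)
```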

The problem of unidentified risk factors and additive models

Similarly, we know that in estimating genotype-specific risks we are averaging over all past exposures, and that much or even most of that consists of unidentified or unmeasured factors. That makes projection into the future even more problematic. And the more factors there are, the less ceteris paribus applies, not just in some formal sense but in the important literal sense: we simply do not see a given test variant at a given spot in the genome enough times, that is, against all applicable background exposures, to have any way of knowing how well the unmeasured factors are represented.

The problem of unique alleles and non-'significant' causes

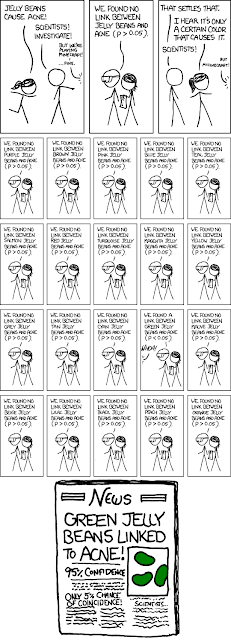

A lot of our knowledge about genetic causation in humans is derived from large statistical survey samples, such as genomewide mapping studies. Because so many individual genomic variant sites are being tested (often millions now, sometimes even billions), we know that by chance alone some variants will be seen more often in cases than in controls. Only some of the time would such a variant be truly causal (that is, in some mechanistic sense), because we know that in sampling, unequal distributions arise just by chance.
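A quick simulation makes the scale of chance 'hits' concrete. It is a minimal sketch, assuming every tested site is non-causal, so that p-values are uniform on [0, 1]; 5 × 10^-8 is the widely used genomewide significance convention, and the million tests are an illustrative figure.

```python
import random

def count_false_positives(n_tests: int, alpha: float, seed: int = 1) -> int:
    """Count null tests (uniform p-values) that fall below `alpha` purely by chance."""
    rng = random.Random(seed)
    return sum(rng.random() < alpha for _ in range(n_tests))

n = 1_000_000  # e.g., a million variant sites, none causal in this simulation
print(n * 0.05, count_false_positives(n, 0.05))  # expect about 50,000 chance "hits"
print(n * 5e-8, count_false_positives(n, 5e-8))  # expect about 0.05, i.e. almost always none
```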

In order not to be swamped by such false-positive signals, we are typically forced to use very conservative statistical significance tests. That essentially means we intentionally overlook huge numbers of genetic variants that may in fact contribute mechanistically to the trait, but only to a small extent. This is a practical matter, but unless we have mechanistic information we currently have no way to deal with things that are too rare, even though they may make up the bulk of the causation in the genome that we are trying to understand (evidence for this is seen, for example, in the 'missing' heritability that mapping studies can't account for). So, for practical reasons, we essentially define rare, small effects as not real, even if together they constitute the bulk of genomic causation!
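The cost of that conservatism can be sketched with a standard normal-approximation power calculation. As an assumption for illustration, take a hypothetical small-effect variant whose expected test statistic is z = 3: it would usually be detected at the conventional 0.05 level but almost never at the genomewide 5 × 10^-8 level.

```python
from statistics import NormalDist

def power_two_sided(effect_z: float, alpha: float) -> float:
    """Approximate power of a two-sided z-test when the expected z-statistic is `effect_z`."""
    z = NormalDist()
    crit = z.inv_cdf(1 - alpha / 2)
    # Probability that the observed statistic lands beyond either critical value.
    return (1 - z.cdf(crit - effect_z)) + z.cdf(-crit - effect_z)

print(power_two_sided(3.0, 0.05))  # roughly 0.85
print(power_two_sided(3.0, 5e-8))  # well under 0.01
```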

Many genetic variants are unique in the population, or at least so rare that they occur only in one person or perhaps a few of that person's very close relatives, or never even appear in huge mapping samples, even though they turn up in the clinic or among people who seek 'personalized genomic' advice. Yet if some single-gene causal variants exist, as we've seen, there is good reason to think that similar variants with essentially single-site causal effects, arising as new or very recent mutations, must be very numerous.

A unique variant found in a case might suggest 100% causation. After all, you see it only once, but in an affected person, don't you? Likewise, if you see it only in an unaffected person, you might attribute 100% protection to it. Clearly these are unreliable if not downright false inferences. Yet most variants in this world are like that: unique, or at least very rare. So we are faced with a real epistemic problem.
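Standard binomial statistics show how little one observation says. This minimal sketch uses the exact (Clopper-Pearson) 95% interval, which has a closed form when all or none of the carriers are affected; the carrier counts are hypothetical. One affected carrier is consistent with a risk anywhere from 2.5% to 100%.

```python
def clopper_pearson_all_or_none(n: int, affected: int, alpha: float = 0.05):
    """Exact (Clopper-Pearson) interval for carrier risk when all or none of n carriers
    are affected; these two edge cases have closed forms, so no library is needed."""
    if affected == n:
        return (alpha / 2) ** (1 / n), 1.0
    if affected == 0:
        return 0.0, 1 - (alpha / 2) ** (1 / n)
    raise ValueError("closed form only covers 0 or n affected")

print(clopper_pearson_all_or_none(1, 1))    # one affected carrier: lower bound 0.025, upper 1.0
print(clopper_pearson_all_or_none(1, 0))    # one unaffected carrier: lower 0.0, upper 0.975
print(clopper_pearson_all_or_none(20, 20))  # twenty affected carriers: lower bound about 0.83
```

Only replication, here twenty carriers instead of one, narrows the interval, which is exactly what a unique variant cannot provide.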

Such variants are very hard to detect if probabilistic evidence is our conceptual method and that evidence relies, as significance tests do, on replicated observations. Some argue that what we must do is use whole-genome sequence in families, or other special designs, to see whether we can find such variants by looking for various kinds of, again, replicated observation. Time will tell how that turns out.

The intractability of non-additive effects (interactions), even though they must generally occur

We usually use additive models when considering multiple risk factors. Each factor's net effect, as seen in our sample, is estimated under the ceteris paribus assumption that we're seeing it against all possible backgrounds, and we estimate an individual's overall risk as a combination, such as the sum, of these independently estimated effects. But the factors may interact; indeed, it is in the nature of life that components are cooperative (in the MT sense: co-operative), and they work only because they interact.

Unfortunately, it is simply impossible to do an adequate job of accounting for interactions among many risk factors. If there are, say, 100 contributing genes (most complex traits involve hundreds, it appears), then there are (100 × 99)/2 = 4,950 possible interactions counting only those that involve two factors. And interactions need not be merely multiplicative: Factor A squared times Factor B may be the way things work. Worse, many gene products, often tens of them, usually interact to bring about a function such as altering gene expression.
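The counting can be checked directly. This sketch counts only one product-type term per subset of genes, which is already an underestimate, since forms like Factor A squared times Factor B would add still more possibilities; the figure of 100 genes is the hypothetical from the text.

```python
from math import comb

n_genes = 100
pairwise = comb(n_genes, 2)              # (100 x 99) / 2 = 4,950 two-way terms
three_way = comb(n_genes, 3)             # 161,700 three-way terms
all_orders = 2 ** n_genes - n_genes - 1  # one term for every subset of two or more genes
print(pairwise, three_way, all_orders)   # all_orders is roughly 1.27 x 10^30
```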

We simply have no way to estimate or understand such an open-ended set of interactions. So our 'probabilistic' notion of multiple causation is simply inaccurate, to an unknown if not unknowable extent. Yet we feel these factors really are, in some sense, causative. This is not just a practical problem that should trouble anyone making genotype-based risk pronouncements (and here we're ignoring environmental factors, to which the same things apply); it is a much deeper issue in the nature of knowledge. We should be addressing it.

What about additive effects: does the concept even make sense? Genes almost always work by interacting with other genes (that is, their coded proteins physically interact). So, suppose the protein coded by G1 binds to that coded by G2 to cause, say, the expression of some other gene. Variants in G1 or G2 might generally add their effects to the resulting outcome, say, the level of gene expression, which, if quantitative, can raise the probability of some other outcome, like high blood pressure or the occurrence of diabetes. So the panoply of sequence variants in G1, and similarly in G2, would generate a huge number of combinations, and this might seem to produce some smooth distribution of the resulting effect. It seems like a fine way to look at things, and in a sense it's the usual way. But suppose a mutation makes G2 inactive; then G1 cannot have its additive effect. It can't have any effect. There is, one could say, a singularity in the effect distribution. This simple hypothetical shows why additive models are not just abstractions, but must be inaccurate to an unknowable extent.
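The G1/G2 hypothetical can be written as two toy models. Both functions and their numbers are hypothetical illustrations, not models of any real genes: while both proteins are present, the complex model behaves exactly like the additive one, but once G2 is inactive, G1 variants have no effect at all.

```python
def additive_expression(g1_effect: float, g2_effect: float) -> float:
    """Additive model: each gene's variant shifts target-gene expression independently."""
    baseline = 1.0  # hypothetical baseline expression level
    return baseline + g1_effect + g2_effect

def complex_expression(g1_effect: float, g2_effect: float, g2_active: bool) -> float:
    """Toy interaction model: G1 and G2 proteins act only as a complex."""
    if not g2_active:
        return 0.0  # no complex forms, so no target-gene expression whatever G1 carries
    baseline = 1.0
    return baseline + g1_effect + g2_effect  # looks additive while both proteins are present

for g1 in (-0.2, 0.0, 0.2):
    print(g1, additive_expression(g1, 0.1), complex_expression(g1, 0.1, g2_active=False))
```

Data gathered mostly from people with a working G2 would support the additive model, yet it would badly mispredict anyone carrying the inactivating G2 mutation.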

The concept of a causal vector: pseudo-single gene causation thinking?

Now, suppose we identify a set of some number, say n, of causal factors, each having some series of possible states in the population (like a spot in the genome with two states, say, T and C). From this causal pool, each person draws one instance of each of the n factors. Whether this is done 'randomly' or in some other way is important, but largely unknown.

This set of draws for each person can be considered his/her 'vector' of risks: [s1, s2, s3, ..., sn]. Each person has such a vector, and we can assemble them into a matrix, or list, of the vectors in the actual population, in the possible population of draws that could in principle occur, or in the set we have captured in our study sample. Associated with this vector, each person also has his/her outcome for the trait we are studying, like diabetes or stature. Overall, from this matrix we can estimate the distribution of resulting outcome values (the fraction 'affected', or average blood pressure and its amount of variation). We can look at the averages for each independent factor (this is what studies typically do). We can thus estimate the factor-specific risk, and so on, as represented in the sample we have.
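The matrix and the per-factor averages can be sketched in a few lines. Everything here is an assumption for illustration: binary states drawn at random with equal frequency, and a hypothetical rule in which a person is affected exactly when factors 0 and 1 are both in state 1. Every outcome is fully determined by the vector, yet the usual per-factor summary reports a 'risk' of about 0.5 for carriers of state 1 at factor 0.

```python
import random

def simulate_matrix(n_people: int, n_factors: int, seed: int = 7):
    """Each person draws a 0/1 state at each of n factors; the outcome follows an
    assumed deterministic rule in which factors 0 and 1 matter only jointly."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n_people):
        vector = [rng.randint(0, 1) for _ in range(n_factors)]
        affected = int(vector[0] == 1 and vector[1] == 1)  # hypothetical rule, no randomness
        rows.append((vector, affected))
    return rows

def factor_specific_risk(rows, factor: int, state: int) -> float:
    """Fraction affected among everyone carrying `state` at `factor`,
    averaged over whatever the rest of their vectors happen to be."""
    outcomes = [aff for vec, aff in rows if vec[factor] == state]
    return sum(outcomes) / len(outcomes)

rows = simulate_matrix(n_people=10_000, n_factors=20)
print(factor_specific_risk(rows, factor=0, state=1))  # near 0.5, though no outcome is random
print(factor_specific_risk(rows, factor=5, state=1))  # near 0.25: an irrelevant factor
```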

However, no sample, nor even any whole population, can include all possible risk vectors. If there were only 100 genetic sites (rather than the hundreds or thousands commonly found in GWAS), each with 3 possible genotypes (AA, Aa, aa), there would be 3^100 (about 5 × 10^47) possible genotypes! Each person's genotype is unique in human history. Our actual observations are only a minuscule sample, and the next generation's genotypes (whose risk we purport to predict) will differ from our generation's. The ceteris paribus assumption of multivariate statistical analysis, which justifies much of that analysis, is basically that this utterly trivial sample captures the essential features of this enormous range of possible background variation.
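The arithmetic is easy to check. In this sketch, the figure of roughly 10^11 people who have ever lived is an assumed, rough order of magnitude used only to show the size of the gap; even if every one of them had been genotyped, they would cover a vanishing fraction of the genotype space.

```python
n_sites = 100            # hypothetical number of causal sites
genotypes_per_site = 3   # AA, Aa, aa
possible = genotypes_per_site ** n_sites
print(f"{possible:.2e}")  # 5.15e+47 possible multilocus genotypes

people_ever = 10 ** 11    # assumed rough order of magnitude of all humans who have ever lived
print(people_ever / possible)  # fraction of the space that could ever have been observed
```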

Each person's risk vector is unique, and we don't observe nearly all possible vectors (even among those that could be associated with our trait). But since we know that each person is unique, how can we treat their risk probabilistically? As we've tried to describe, we assign each risk factor a probability, and its associated variation among people, that essentially reflects the fraction of the risk vectors containing it that fall into the 'affected' category. But that has nothing to do with whether the individual factor whose risk we're providing to people is deterministic once you know the rest of the person's vector, or is itself acting probabilistically.

If the probabilistic nature of our interpretation means that, given your state at the test site, you would end up with the trait in this fraction of all the backgrounds you might have (and that we've captured in our studies), then that is actually an assumption that the risk vector is wholly deterministic, or that any residual probabilism is due to unmeasured factors.

Surprisingly, while this is not exactly invoking single-gene causation, it's very similar thinking. It uses other people's exposures and outcomes to tell us what your outcome will be, and it is in a way at the heart of reductionist approaches that assume causal factors are replicable from one individual to the next. Your net risk is essentially the fraction of people with your exact risk vector who would have the test outcome. The only little problem is that there is nobody else with the same set of risk factors!
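A simulation shows how quickly 'people with your exact risk vector' run out. This minimal sketch assumes n independent binary factors with 50:50 frequencies, encoding each vector as the bits of an integer; real frequencies are unequal, which makes matches somewhat more likely for a few factors, but the count still collapses as factors are added.

```python
import random

def count_exact_matches(n_people: int, n_factors: int, seed: int = 3) -> int:
    """Count how many of n_people - 1 others carry exactly the same vector of
    n binary factors (encoded as an integer's bits) as the first person."""
    rng = random.Random(seed)
    you = rng.getrandbits(n_factors)
    return sum(rng.getrandbits(n_factors) == you for _ in range(n_people - 1))

print(count_exact_matches(n_people=100_000, n_factors=2))   # about 25,000 people share your vector
print(count_exact_matches(n_people=100_000, n_factors=50))  # almost certainly 0
```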

It may be that nobody believes in single-gene causation literally, but in practice what we're doing is to some extent effectively, conceptually, and perhaps even logically similar. It is a way of hoping for fixed, billiard-ball (or at least highly specific distributional) causation: given the causal vector, the outcome will occur. In the same way that single-gene causation isn't really about single genes (i.e., a mutation at a specific nucleotide in the CFTR gene is treated causally as if it were the whole gene, even though we know that's not true), we now tend to treat a vector of causes, or more accurately perhaps each person's genome, as a deterministic genetic cause. The genome replaces the gene in single-cause thinking. That, in essence, is what 'personalized genomic prediction' is really promising.

Essentially, the doubt (the probabilistic statement of risk) arises only when we consider each factor independently. After all the effort of genotyping on a massive scale, if we tried to estimate the range of outcomes consistent with the estimated probability (the variance around the point estimate), the result would in most cases be of very little use, since what each of us cares about is not the average risk but our own specific risk. There are causal factors so strong that these philosophical issues don't matter, and our predictions are, if not perfect, adequate for making life-and-death decisions about genetic counseling, early screening, and so on. But it is that minority of cases that addicts us to the approach.

This is, to us, a subtle but slippery way of viewing causation. Because it is clothed in multi-causal probabilistic rhetoric, it seems conceptually different from an old-fashioned single-gene view of causation, but it leads us to avoid facing what we know are the real, underlying problems in our notions of epidemiological and genetic causation. It's a way of justifying business as usual, on the hope that scaling up will finally solve the problem, and of avoiding the intimidating task of thinking more deeply about the problem if, or when, more holistic effects within individuals, rather than effects averaged over all individuals, are at work.

What we have discussed are basically aspects of the use of multivariable statistics, not anything we have dreamed up out of nowhere. But they are relevant, we believe, to a better understanding of what may be called the emergent traits of biological creatures, relative to their inherited genomes.

Let's think again about genetic determinism!

On the other hand, we do in fact have rather clear evidence that genetic determinism, or perhaps genomic determinism, might be true after all. Not only that, we have the kind of repeatable observations of unique genotypes that you want to have if you accept statistical epistemology! And we have such evidence in abundance.

But this is for next time.