The direct-to-consumer DNA genotyping company, 23andMe, announced on Monday that it was soon to receive its first patent, one that it had applied for in 2010 (that patent application is here). It has to do with genetic polymorphisms associated with Parkinson's disease (PD), and, while we're not patent lawyers by any means, the application seems to cover genetic variants and testing. This announcement is a surprise to many, including many of its customers (indeed, the consent form says nothing about possible patents), in part because the company has come out against gene patenting in the past.

Ken and I have had issues all along with the scientific merit of what these companies are selling (see this post and this one, e.g.). And we're far from the only ones. Much of what they report back to consumers is what the consumers already know (e.g., hair color, freckles), and the estimates of most disease risk, which they also report, just aren't ready for prime time for all sorts of reasons that we and others have commented amply upon. So, while it may be fun for people to know their genotypes, and sometimes their ancestry estimates, the information is generally not very useful clinically. And apparently it's not just for public edification or recreation: it is run by Google money, after all. We believe it's unethical for any of these companies to be selling disease risk, no matter how many caveats they include, and this is before considering the profit motive in patenting what they have found apparently on the backs of their customers.

How does 23andMe work? You spit in a tube and send it to the company along with your payment, and they genotype you at selected sites and then give you full access to that data, with some explanation. If you want to take part in the research the company does then you can choose to answer surveys about lifestyle or other risk factors, which is your consent for them to throw that information and your genetic data into their research pool. The company says that most of their customers have chosen to do this, but at the same time, it seems that none of their customers were told that gene patents might result.

Gene patenting has been controversial since the first one was applied for, in part because it is difficult to know how to apply the generic wording on patents in the Constitution to the molecular age. Basically, patents should cover inventions or discoveries, and while scientists may in fact discover genes, the law now says that they can only be patented if the scientist has also discovered a function for the gene. That's a law that had to have been written by not-disinterested geneticists! Legally speaking, the polymorphisms discovered by 23andMe for PD are patentable, but is that really innovation?

But legal or not, many, including us, are still not in favor of gene patenting. To us, patents should not be a way to own properties of nature; they should reward innovative, value-added products. A medical test that any beginner who works in a genetics lab for a week could replicate shouldn't be covered. If the patents were designed to protect against commercialization of naturally occurring genotypes, as NIH once threatened to do, that would be a worthy public-domain protectant--against predatory commercial practices--but that's not the idea here.

The gene patenting issue has been endlessly discussed and the discussion is easily accessed online, so we won't repeat the arguments here except to say that our personal concerns are that patenting makes public, and often publicly funded, information private, for private personal gain. In addition, it can tie the hands of clinicians who want to be able to offer genetic testing to their patients, it can prohibit others from doing research on a gene of interest, and so on. And while we may be a minority, we're not in favor of the get-rich-quick motivation for doing research. Some "defensive patenting" has been going on (patenting to protect against genetic profiteering), but in our opinion that should not be necessary -- naturally occurring genes should not be patentable, period.

As if the issue of this 23andMe patent weren't problematic enough, it seems that the company's motivation for focusing on Parkinson's disease is a very personal one. Apparently the CEO's husband, one of the founders of Google, has been found to have a PD risk allele, and the couple has donated millions of dollars to the Michael J Fox Foundation (which will be one of the beneficiaries of this patent, along with Scripps Research Institute) for research. Well, fine, but they should have been up front about their personal interest in mining the 23andMe consumer database for PD variants and asked their customers whether they were willing to donate spit, and pay for what turned out to be a PD research 'crowd-funding' project (see our post last week on this idea as related to fairer science funding). Instead, they appear to have done it surreptitiously, compounding what already has many 23andMe customers feeling misled, if not betrayed (see comments on Twitter for examples--here's a randomly chosen set of them, e.g.). Not to mention that some of the scientists affiliated with this company have had prior apparent interests in ethics, to boot.

Thursday, May 31, 2012

Wednesday, May 30, 2012

Magical science: now you see it, now you don't. Part II: How real is 'risk'?

By

Ken Weiss

Why is it that after countless studies, we don't know whether to believe the latest hot-off-the-press pronouncement of risk factors, genetic or environmental, for disease, or of assertions about the fitness history of a given genotype? Or in social and behavioral science....almost anything! Why are scientific studies, if they really are science, so often not replicated when the core tenet of science is that causes determine outcomes? Why should we have to have so many studies of the same thing, even decade after decade? Why do we still fund more studies of the same thing? Is there ever a time when we say Enough!?

That time hasn't come yet, and partly that's because professors have to have new studies to keep our grants and our jobs, and we do what we know how to do. But there are deeper reasons, without obvious answers, and they're important to you if you care about what science is, or what it should be--or what you should be paying for.

Last Thursday, we discussed some aspects of the problem that arises when the causes we suspect work only by affecting the probability of an outcome we're interested in. The cause may truly be deterministic, but we just don't understand it well enough, so we must view its effect in probability terms. That means we have to study a sample of many individuals exposed to the risk factor we're interested in, in the same way you have to flip a coin many times to see if it's really fair--if its probability of coming up Heads is really 50%. You can't just look at the coin or flip it once.
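The coin analogy can be made concrete with a small simulation. This is only a sketch: the function name, the seed, and the flip counts are assumptions chosen for illustration. It shows how the uncertainty around an estimated probability shrinks only slowly (roughly with the square root of the number of flips).

```python
import math
import random

def estimate_heads_probability(n_flips, p_true=0.5, seed=0):
    """Flip a simulated coin n_flips times; return (estimate, standard_error)."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    heads = sum(1 for _ in range(n_flips) if rng.random() < p_true)
    p_hat = heads / n_flips
    # Standard error of a sample proportion: sqrt(p * (1 - p) / n)
    se = math.sqrt(p_hat * (1 - p_hat) / n_flips)
    return p_hat, se

for n in (10, 100, 10_000):
    p_hat, se = estimate_heads_probability(n)
    # 1.96 standard errors gives an approximate 95% interval
    print(f"n={n:>6}: estimate={p_hat:.3f}, approx. 95% interval = +/-{1.96 * se:.3f}")
```

With a handful of flips a fair coin and a noticeably biased one are hard to tell apart; the same logic applies to a weak risk factor studied in a small sample.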

Nowadays, reports are often meta-analyses, in which, because it is believed that no single study is definitive (i.e., reliable), we pool them and analyze the lot, that is, the net result of many studies, to achieve adequate sample sizes to see what risk really is associated with the risk factor. It should be a warning in itself that the samples of many studies (funded because they claimed and reviewers expected them to be adequate to the task) are now viewed as hopelessly inadequate. Maybe it's a warning that the supposed causes are weak to begin with--too weak for this kind of approach to be very meaningful?
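For readers who have not seen how pooling works mechanically, here is a minimal sketch of one common approach, fixed-effect inverse-variance weighting. It assumes every study is estimating the same underlying effect, which is exactly the assumption questioned in this post. The function name and the study values are hypothetical, made up purely for illustration.

```python
import math

def pool_fixed_effect(estimates, standard_errors):
    """Inverse-variance weighted average of study estimates (fixed-effect model)."""
    weights = [1.0 / se ** 2 for se in standard_errors]  # precise studies count more
    pooled = sum(w * e for w, e in zip(weights, estimates)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se

# Hypothetical effect estimates (e.g. log relative risks) and their standard errors
estimates = [0.30, 0.05, -0.10, 0.12]
standard_errors = [0.20, 0.15, 0.25, 0.10]

pooled, pooled_se = pool_fixed_effect(estimates, standard_errors)
print(f"pooled estimate = {pooled:.3f}, standard error = {pooled_se:.3f}")
```

The pooled standard error is always smaller than that of any single study, which is the appeal of the method; it says nothing about whether the studies were measuring the same thing.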

Why, among countless examples, after having done many studies don't we know if HDL cholesterol does or doesn't protect from heart disease, or antioxidants from cancer, or whether coffee is a risk factor, or obesity is, or how to teach language or math, or avoid misbehavior of students, or whether criminality is genetic (or is a 'disease'), and so on? There are so many examples in the daily news, and you are paying for this, study after study without conclusive results, every day!

There are several reasons. These are serious issues, worthy of the attention of anyone who actually cares about understanding truth and the world we live in, and its evolution. The results are important to our society as well as to our basic understanding of the world.

So, then, why are so many results not replicable?

Here are at least some reasons to consider:

1. If no one study is trustworthy, why on earth would pooling them be? Overall, when this is the situation, the risk factor is simply not a major one!

2. We are not defining the trait of interest accurately

3. We are always changing the definition of the trait or how we determine its presence or absence

4. We are not measuring the trait accurately

5. We have not identified the relevant causal risk factors

6. We have not measured the relevant risk factors accurately

7. The definition of the risk factors is changing or vague

8. The individual studies are each accurate, and our understanding of risk is in error

9. Some of the studies being pooled are inaccurate

10. The first study or two that indicated risk were biased (see our post on replication), and should be removed from meta-analysis....and if that were done the supposed risk factor would have little or no risk.

11. The risk factor's effects depend on its context: it is not a risk all by itself

12. The risk factor just doesn't have an inherent causal effect: our model or ideas are simply wrong

13. The context is always changing, so the idea of a stable risk is simply wrong

14. We have not really collected samples that are adequate for assessing risk (they may not be representative of the population at-risk)

15. We have not collected large enough samples to see the risk through the fog of measurement error and multiple contributing factors

16. Our statistical models of probability and sampling are not adequate or are inappropriate for the task at hand (usually, the models are far too simplified, so that at best they can be expected only to generate an approximate assessment of things)

17. Our statistical criteria ('significance level') are subjective but we are trying to understand an objective world

18. Some causes that are really operating are beyond what we know or are able to measure or observe (e.g., past natural selection events)

19. Negative results are rarely published, and so meta-analyses cannot include them, so a true measure of risk is unattainable

20. The outcome has numerous possible causes; each study picks up a unique, real one (familial genetic diseases, say), but it won't be replicable in another population (or family) with a different cause that is just as real

21. Population-based studies can never in fact be replicated because you can never study the same population--same people, same age, same environmental exposures--at the same time, again

22. The effect of risk factors can be so small--but real--that it is swamped by confounding, unmeasured variables.
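Items 10 and 19 in the list above can be illustrated with a small simulation. This is a sketch under stated assumptions, not a model of any real literature: the true effect is set to zero, every study has the same size, and only "positive, significant" results get published. All names and numbers here are hypothetical.

```python
import random
import statistics

def simulate_studies(n_studies=2000, n_per_study=50, true_effect=0.0, seed=1):
    """Each simulated study reports the mean of noisy observations and its standard error."""
    rng = random.Random(seed)
    results = []
    for _ in range(n_studies):
        sample = [rng.gauss(true_effect, 1.0) for _ in range(n_per_study)]
        mean = statistics.fmean(sample)
        se = statistics.stdev(sample) / n_per_study ** 0.5
        results.append((mean, se))
    return results

def published_only(results, z_cutoff=1.96):
    """Keep only studies whose estimate is positive and 'significant'."""
    return [(m, se) for m, se in results if m / se > z_cutoff]

all_results = simulate_studies()
published = published_only(all_results)

mean_all = statistics.fmean([m for m, _ in all_results])
mean_published = statistics.fmean([m for m, _ in published])

print(f"studies run: {len(all_results)}, studies 'published': {len(published)}")
print(f"average effect, all studies:       {mean_all:.3f}")
print(f"average effect, published studies: {mean_published:.3f}")
```

Even though the simulated risk factor does nothing, the published subset shows a clearly positive average effect, and a meta-analysis restricted to that subset would inherit the bias.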

This situation--and our list is surely not exhaustive--is typical and pervasive in observational rather than experimental science. (For the same kinds of problems, lists just as long exist to explain why some areas even of experimental science don't do much better!)

A recent Times commentary and post of ours discussed these issues. The commentary says that we need to make social science more like experimental physical science with better replications and study designs and the like. But that may be wrong advice. It may simply lead us down an endless, expensive path that simply fails to recognize the problem. Social sciences already consider themselves to be real science. And presenting peer-reviewed work that way, they've got their fingers as deeply entrenched in the funding pot as, say, genetics does.

Whether coffee is a risk factor for disease, or certain behaviors or diseases are genetically determined, or why some trait has evolved in our ancestry...these are all legitimate questions whose non-answers show that there may be something deeply wrong with our current methods and ideas about science. We regularly comment on the problem. But there seems to be no real sense that there's an issue being recognized, in opposition to the forces that pressure scientists to continue business as usual---which means that we continue to do more and more and more-expensive studies of the same things.

One highly defensible solution would be to cut support for such non-productive science until people figure out a better way to view the world, and/or to require scientists to be accountable for their results. No more "I write the significance section of my grants with my fingers crossed behind my back" because I know that I'm not telling the truth (and the reviewers, who do the same themselves, know that I am doing that).

As it is, resources go to more and more and more studies of the same that yield basically little, students flock to large university departments that teach them how to do it, too, journals and funders make their careers reporting their research results, and policy makers follow the advice. Every day on almost any topic you will see in the news "studies show that....."

This is no secret: we all know the areas in which the advice goes little if anywhere. But politically, we haven't got the nerve to make such cuts and in a sense we would be lost if we had nobody assessing these issues. What to do is not an easy call, even if there were the societal will to act.

Tuesday, May 29, 2012

More nature, less supernature: Results of a new biological anthropology curriculum

|

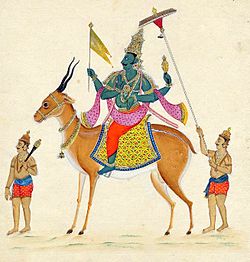

| Wind God (Tokyo National Museum) |

Number one. I rearranged everything relative to all the mainstream textbooks. That includes teaching evolution (common ancestry, deep time), then inheritance, variation, and mutation, then genetic drift and gene flow and THEN natural selection.

Number two. I actively teach creation alongside evolution and am ashamed that it took me this long to do it (but won't beat myself up too much about it considering all the political pressure to keep it out of science classes, which I'm sympathetic to but no longer agree with entirely. For more read here.)

Number three. Students voluntarily spat for 23andMe and were able to see thousands of their SNPs that were genotyped for free and also heard excellent guest speakers on genetics and genetic testing. (All thanks to a teaching grant I received from my university.)

Students who take this course are mostly freshmen and sophomores (18-20 years old) who are undecided majors or non-majors. It serves as a "general education" credit in the natural sciences that fulfills graduation requirements for nearly all majors on campus. The course is required for any anthropology majors and minors in the class, and future ones.

I pre- and post-tested them on science and evolution issues--using the same questions* I asked of students in the Fall 2011 course where I did not implement this curriculum. I also surveyed the students at the end of the semester about the 23andMe experience (and I may report the results of that survey in a later post).

Based on those pre- (n = 82) and post- (n = 85) tests, here's a bit of what I learned about what they learned during Spring 2012, either inside or alongside my course, during those three or so months of their lives. Percentages are presented as pre...post %.**

[Image: Wind God, Ehecatl, an avatar of Quetzalcoatl (en.wikipedia.org)]

Result #1: Greater understanding of, and confidence about, evolution and science

Showed improvement.

From already correct majority to even larger correct majority. Listed from least to most improvement.

- There is lots of evidence against evolution. 78...81% disagree

- If two light-skinned people moved to Hawaii and got very tan their children would be born more tan than they (the parents) were originally. 85...88% disagree

- Humans and chimpanzees evolved separately from an ape-like ancestor. 70...73% agree

- Dinosaurs and humans lived at the same time in the past. 83...87% disagree

- A species evolves because individuals want to. 83...88% disagree

- Variation among individuals within a species is important for evolution. 84...92% agree

- You cannot prove evolution happened. 70...79% disagree

- A scientific theory is a set of hypotheses that have been tested repeatedly and have not been rejected. 78...88% agree

- The theory of evolution correctly explains the development of life. 77...88% agree

- Evolution is always an improvement. 54...72% disagree

- Evolution cannot work because one mutation cannot cause a complex structure (e.g., the eye). 62...88% disagree

From incorrect majority to correct majority.

- New traits within a population appear at random. 59% disagree...55% agree

- A species evolves because individuals need to. 73% agree... 49% disagree

- “Survival of the fittest” means basically that “only the strong survive.” 65% agree...72% disagree

For comparison...Fall 2011 students (remember, they did not have the three curricular changes; n= 70) improved on only 10 of the 14 questions above. Here are the four exceptions where they did not improve (and instead got worse!):

- Dinosaurs and humans lived at the same time in the past.

- If two light-skinned people moved to Hawaii and got very tan their children would be born more tan than they (the parents) were originally.

- A species evolves because individuals need to.

- “Survival of the fittest” means basically that “only the strong survive”.

Showed improvement, but still WRONG.

Fewer students chose the wrong answer and by extension more students chose the correct answer. None of these questions showed improvement among Fall 2011 students.

- The environment determines which new traits will appear in a population. 80...76% agree (should disagree)

- All individuals in a population of ducks living on a pond have webbed feet. The pond completely dries up. Over time, the descendants of the ducks will evolve so that they do not have webbed feet. 69...59% agree (should disagree)

- If webbed feet are being selected for, all individuals in the next generation will have more webbing on their feet than individuals in their parents’ generation. 59...51% agree (should disagree)

Fewer chose the correct answer. All of these also got worse for 2011 students.

- Small population size has little or no effect on the evolution of a species. 79...78% disagree

- If two distinct populations within the same species begin to breed together this will influence the evolution of that species. 88...83% agree

- A scientific theory that explains a natural phenomenon can be defined as a “best guess.” 45% disagree...53% agree

Huh?

88% agree with: A scientific theory is a set of hypotheses that have been tested repeatedly and have not been rejected.

Okay, great, but 53% also agree with: A scientific theory that explains a natural phenomenon can be defined as a “best guess.”

Stayed the same. Also stayed the same in 2011.

Two of the most important factors that determine the direction of evolution are survival and reproduction. 94...94% agree

On confidence...

I have a clear understanding of the meaning of scientific study. 76...89% agree

I have a clear understanding of the term “fitness” when it is used in a biological sense. 58...88% agree

Comments

In terms of some fundamentals, undergrads seem to have a decent handle on things coming into my class but there's still a lot of room for improvement. It's frustrating that some of these show improvement only to 73% (like question 8). I mean, roughly 95% get question 8 right on the exam but with different wording. To get to the bottom of it, I think I'll talk through #8 explicitly with students. I do think that in many instances semantics are muddying the results. Unfortunately I can't change the wording if I want to keep comparing results to prior semesters (and to the results published in the source article*).

Nevertheless, it's clear that 2012's students outperformed 2011's by improving on more questions (both the ones the majority already knew and the ones that the majority still does not know but is getting there!). Next time I'm going to need to better cover "theory," population size effects, gene flow effects, and general population variation over the generations. Or I can continue, like I do, to go further in depth with population genetics in the upper level courses, since I can't expect students to learn everything in one semester. I didn't after all. Still haven't!

[Image: Wind God, Vayu (en.wikipedia.org)]

Result #2: No need of that hypothesis

Let's compare the responses between 2011 (remember, no rearranged presentation, no teaching creation, and no 23andMe) and 2012 with their pre...post %.

Fall 2011

Agree: 26...25%

Disagree: 57...58%

No opinion/undecided: 17...17%

Spring 2012

Disagree: 57...72%

No opinion/undecided: 17...13%

Comments

That's an increase of 15 percentage points (from 57% to 72%) in students in my course who disagree, which is to report that they have no need of the god hypothesis for human evolution and no need for human exceptionalism.***

That degree of change is roughly on par with the improvement seen here (from above):

- The theory of evolution correctly explains the development of life. 77...88% agree

- Evolution is always an improvement. 54...72% disagree

- Evolution cannot work because one mutation cannot cause a complex structure (e.g., the eye). 62...88% disagree

- A species evolves because individuals need to. 73% agree... 49% disagree

- “Survival of the fittest” means basically that “only the strong survive.” 65% agree...72% disagree

- I have a clear understanding of the meaning of scientific study. 76...89% agree

- I have a clear understanding of the term “fitness” when it is used in a biological sense. 58...88% agree

Even if stats enable me to predict improvements with letting go of supernature, I'm not so sure that it will be clear that this change was caused by my course and new curriculum. For me, my loss of the supernatural was so gradual that I can't credit one course! I just kind of sloughed off the supernatural over time. So it's quite possible that these results don't reflect a change in many of those students, as much as their new found willingness to admit that a change is occurring or has occurred. Still, that I tried out this new curriculum... it's hard not to assume causation from correlation. (But like I said, I have to do the stats if I'm to see about that.)
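One simple place to start on those stats is a two-proportion z-test. The sketch below is only a rough first look and rests on a loud assumption: it treats the pre-test (n = 82) and post-test (n = 85) groups as independent samples, which they are not, since they are largely the same students. A paired analysis would be more appropriate if individual responses could be linked. The function name is hypothetical; the percentages are the Spring 2012 "disagree" figures reported above.

```python
import math

def two_proportion_z(p1, n1, p2, n2):
    """Two-sided z-test for a difference between two independent proportions."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)  # pooled proportion under "no change"
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p2 - p1) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Spring 2012 "disagree": 57% of 82 pre-test, 72% of 85 post-test
z, p_value = two_proportion_z(0.57, 82, 0.72, 85)
print(f"z = {z:.2f}, two-sided p = {p_value:.3f}")
```

Whatever the test returns, it speaks only to whether the pre/post difference is larger than sampling noise, not to whether the curriculum caused it.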

I'm not going to lie... I'm so proud of my students for how they answered this one question. And I'm not going to pretend this isn't dangerous information that our friends and heroes who battle creationist politicians might not like to share with those creationist politicians!

The fear of losing the god hypothesis is a major reason why creationists don't want evolution to be the only explanation taught in K-12 biology classrooms (or don't want it taught at all). And it's also why the human animal is so often left out of K-12 biology curriculum. And my experience, here, suggests that that fear is justified! But what's ironic about the creationist plea for getting creation into science classes to better "teach the controversy" and to cover "competing theories" (the only two being creation and evolution) is how my experience suggests that teaching creation alongside evolution is a recipe for losing supporters of the supernatural. (see here)

It's important to notice that the question I ask students is not about whether they believe in God, or are religious or spiritual, or pray or meditate, or whether they believe in a higher power or a greater force or anything like that! It's just asking whether human nature requires supernature. And they're saying no.

Why does that matter? Why am I so proud of them? Here's just one reason: Because if you accept nature, then you somehow understand the delicate role of each species, including humankind, in the ecosystem. And maybe humans who see this more clearly will take more compassionate, human-friendly, and Earth-friendly actions at the market, in the voting booth, and in their communities.

|

| Juno Asking Aeolus to Release the Winds, F. Boucher, Paris, 1769 (Uppity note: The original title was Pull My Finger and Boucher's later variations on this theme incorporated Prometheus.) |

Notes

* Assessment pre- and post-test questions are taken from this article: Cunningham DL, Wescott DJ. Still more "fancy" and "myth" than "fact" in students' conception of evolution. Evolution: Education and Outreach 2009;2:505-517.

**Students are asked to report whether they "strongly agree," "somewhat agree," "somewhat disagree," "strongly disagree," or "no opinion/undecided/never heard of it." I don't report everything here. And I'm lumping "strongly" and "somewhat" categories into just plain "agree" or "disagree."

***Unfortunately another way to read this statement and yet still disagree with it is from the hard core creation perspective: No evolution at all! However, I have few of those students in my course and expect to have fewer of them, not more of them, by the end of the course. That's not because they change their minds about hardcore creation, necessarily, but because the few who'd take my course in the first place would probably drop it before it's over. I will add a question that is better worded to the next round of tests in the Fall semester and I'll keep this one as reference. Something like "Evolution does not explain human existence" would help me gauge how many might disagree with the above question from the minority (no evolution at all) perspective.

Monday, May 28, 2012

Big Science, Stifling Innovation, Mavericks and what to do (if anything) about it

By

Ken Weiss

We want to reply to some of the discussion and dissension related to our recent post about the conservative nature of science and the extent to which it stifles real creativity. Here are some thoughts:

There are various issues afoot here.

In our post, we echoed Josh Nicholson's view that science doesn't encourage innovation, and that the big boy network is still alive and well in science. We think both are generally true, but this doesn't mean that all innovators are on to something big, nor that the big boy network doesn't ever encourage innovation. Some mavericks really are off-target (we could all name our 'favorite' examples) and funding should not be wasted on them. The association of Josh Nicholson with Peter Duesberg apparently has played a role in some of the responses to his BioEssays paper. That specific case somewhat clouds the broader issues, but it was the broader issues we wanted to discuss, not any particular ax Nicholson might have to grind.

One must acknowledge that the major players in science are, by and large, legitimate and do contribute to furthering knowledge, even if they do (or that's how they) build empires. There is nonetheless entrenchment by which these investigators have projects or labs that are very difficult to dislodge. Partly that's because they have a good track record. But only partly. They also typically reach diminishing returns, and the politics are such that the resources cannot easily be moved in favor of newer, fresher ideas.

It's also true that even incremental science is a positive contribution, and indeed most science is almost by necessity incremental: you can't stimulate real innovation without a lot of incremental frustration or data to work from. Scientific revolutions can occur only when there's something to revolt against!

If we suppose that, contrary to fact, science were hyper-democratized such as by anonymizing grant proposers' identities (see this article in last week's Science), the system would be gamed by everyone. Ways would be found to keep the Big guys well funded. Hierarchies would quickly be re-established if the new system stifled them. The same people would, by and large, end up on top. Partly--but only partly--that's because they are good people. Partly, as in our democracy itself, they have contacts, means, leverage, and the like.

And it's very likely that if the system were hyper-democratized a huge amount of funding would be distributed among those who would have trouble being funded otherwise. Since most of us are average, or even mediocre, most of the time, this would be a large expenditure if it really were applied to a major fraction of total resources, with contributions likely watered down even further than is the case now. But that kind of broad democratization is inconceivably unlikely. More likely we'd have a tokenism pot, with the rest for the current system.

Historically, it seems likely that most really creative mavericks, the ones whom our post was in a sense defending, often or perhaps typically don't play in the stodgy university system anyway. They drop out and work elsewhere, such as in the start-up business world. To the extent that's true, a redistribution system would mainly fund the hum-drum. Of course, maybe the budgets should just be cut, encouraging more of science to be done privately. Then again, as we say often on MT, there are some fields (we won't name them again here!) whose real scientific contributions are very much less than those of other fields, because, for instance, they can't really predict anything accurately, and prediction is one of the core attributes of science.

One can argue about where public policy should invest--how much safe but incremental vs risky and likely to fail but with occasional Bingos!

It is clear from the history of science that the Big guys largely control the agenda and perhaps sometimes for the good, but often for the perpetuation of their views (and resources). This is natural for them to do, but we know very well that our 'Expert' system for policy is in general not a very good one, and we keep paying for go-nowhere research.

Perhaps the anthropological reality is that no feasible change can make much difference. Utopian dreams are rarely realized. Maybe serendipitous creativity just has to happen when it happens. Maybe funding policy can't make it more likely. Such revolutionary insights are unusual (and become romanticized) because they're so rare and difficult.

This kind of conservative hierarchy and tribal behavior is really just a part of human culture more broadly. Still, we feel that the system has to be pushed to correct its waste and conservatism so it doesn't become even more entrenched. Clearly new investigators are going to be in a pinch--in part because the current system almost forces us to create the proverbial 'excess labor pool', because the system makes us need grad students and post-docs to do our work for us (so we can use our time to write grants), whether or not there will be jobs for them.

Again, there is no easy way to discriminate between cranks, mavericks who are just plain wrong, those of us who romanticize our own deep innovative creativity or play the Genius role, and mediocre talent that really has no legitimate claim to limited resources. The real geniuses are few and far between.

A partial fix might be for academic jobs to come with research resources as long as research was part of the conditions for tenure or employment. Much would be wasted on wheel-spinning or trivia, and careerism, of course. But it could at least potentiate the Bell Labs phenomenon, increasing the chance of discovery.

We cannot expect the well-established scientists generally to agree with these ideas unless they are very senior (as we are) and no longer worried about funding....or are just willing to try to tweak the system to make it better. When it's just sour grapes, perhaps it is less persuasive. But sometimes sour grapes are justified, and we should listen!

Friday, May 25, 2012

You scientist, we want you to get ahead....but not too FAR ahead!

A paper in the June issue of BioEssays is titled "Collegiality and careerism trump critical questions and bold new ideas" and, no, we didn't write it. The subtitle is "A student’s perspective and solution": the author is Joshua Nicholson, a grad student at Virginia Polytech. It's a mark of the depth of the problem that it is recognized and addressed by a student, in a very savvy and understanding way. But of course it's students who will most feel its impact as they begin their careers when money is tight and the old boy network is alive and well. The situation is so critical today that even a student can sense it without the embittering experience of years of trying to build a post-training career.

As students we are taught principles and ideals in classrooms, yet as we advance in age, experience, and career, we learn that such lessons may be more rhetoric than reality.

Nicholson is not the first to notice that the current system of funding and rewards encourages more of the same, not innovation. Scientists, he notes, are discouraged from having radical, or even new, ideas in everything from grant applications to the mere expression of ideas. Indeed, numerous examples exist of brilliant scientists who have said they couldn't have done their work within the system: Darwin, Einstein, and James Lovelock, the conceptor of Gaia (whatever you think of it) and also an innovative inventor, who said so recently on BBC Radio 4's The Life Scientific. Other creative people, in the arts, have felt the same way about universities (e.g., Wordsworth the poet, Goya the painter).

The US National Institutes of Health and National Science Foundation both pay lip service to innovation, yes, but still within the same system of application and decision-making. Nicholson says that the NIH and NSF in fact admit that these efforts are not encouraging innovation (as those of us who have been on such panels and never seen an original project actually funded--usually the reviewers pat the proposer on the head patronizingly and say make it safe and resubmit). He blames this, correctly, on the review structure; peer review. Yes, experts in a field are required to evaluate new ideas, but it is they who are often most unwilling to accept them.

To be fair, usually this is not explicit and reviewers may usually not even be aware of their inertial resistance to novelty. But Nicholson explains that:

(i) they helped establish the prevailing views and thus believe them to be most correct, (ii) they have made a career doing this and thus have the most to lose, and (iii) because of #1 and #2 they may display hubris [2–4, 9, 10]. If, historically, most new ideas in science have been considered heretical by experts [11], does it make sense to rely upon experts to judge and fund new ideas?

He concludes that a student looking to build a career therefore must choose between getting funding by following the crowd and doing more of the same, or being innovative but without any money... that is, driving a taxi.

He goes on to say that the system not only encourages safe science, but cronyism as well. We would add that this includes hierarchies, which foster obedience by many to the will of the few. Because the researcher's affiliation, collaborators, co-authors, publication record and so on are a part of the whole grant package, it's impossible for reviewers to not use this information in their judgments and review a grant impartially. As Nicholson puts it, the whole emphasis is on a scientist "being liked" by the scientific community. Negative findings are rarely published, which in effect means that scientists can't disagree with each other in print, and peer review ensures that scientists stay within the fold. Nicholson believes this has all created a culture of mediocrity in science. We can say from experience that submitting grants anonymously is unlikely to work because, like 'anonymous' manuscripts sent out for review, one can almost always guess the authors.

There is of course a problem. Most off-center science is going to go nowhere. Real innovation is a small fraction of ideas that claim it (sincerely or as puffery). Accepted wisdom has been hard-won and that's a legitimate reason to resist. So not all those whose ideas are off base are brilliant or right. How one tells in advance is the question that's a problem because there's no good way, and that provides a ready-made excuse for generic resistance.

Nicholson's solution to restructuring "the current scientific funding system, to emphasize new and radical work"? He proposes that the grant review system change to include non-scientists who don't understand the field, as well as scientists who do. "Indeed," he says, "the participation of uninformed individuals in a group has recently been shown to foster democratic consensus and limit special interests." And, "crowd funding" has been successful in a lot of non-scientific arenas, he notes, and could conceivably be used to fund grants as well.

It will come as no surprise to regular MT readers to know that we endorse Joshua Nicholson's indictment of the current system. Peer review seems necessary, brilliant and democratic, and it was established largely and explicitly to break up and prevent Old Boy networking, and make public research funding more 'public'. Indeed, money no longer goes quite so exclusively to the Elite universities. But politicians promise things to get a crowd of funders, who want the rewards. And even that, like any system, can be gamed, and a pessimist (or realist?) is likely to argue that after you've relied on it for a while, it produces just the kind of stale, non-democratic, old boy network that Nicholson describes--similar hierarchies even with many of the same hierarchs resurfacing.

It's unlikely that the grant system will undergo radical transformation any time soon, because too many people would have to be dislodged, though, perhaps when the old goats retire and get out of the way, that will smooth the way. But there are rumblings in the world of scientific publishing, and demands for change, and this makes us hopeful that perhaps these growing challenges to the system can have widespread effects in favor of innovation and a more egalitarian sharing of the wealth (in the form of academic positions, grant money, publications, and so on). The demands are coming from scientists boycotting Elsevier Publishing because they are profiting handsomely from the scientists' free labor; scientists and others petitioning for open and free access to papers publishing the results of studies paid for by the taxpayer; physicists circumventing the old-boy peer review process by publishing online or first passing their manuscripts through open-ended peer review online. And, yes, there are open access journals (e.g., PLoS), though generally at high cost.

The system probably can't change too radically so long as science costs money and research money doesn't come along with salary as part of an academic job, as it probably should since research is required for the job! Instead, the opposite is true: universities hunger for you to come do your science there largely because they expect you to bring in money (they live on the overhead)! And humans are tribal animals so the fact that who you know is such an intrinsic part of the scientific establishment is not a surprise--but that aspect of the system can and should be changed. The reasons that science has grown into the lumbering, conservative, money-driven, careerist megalith that it is can be debated, as can the degree to which it is delivering the goods, even if imperfectly. But it is possible that we're beginning to see glimmers of hope for change. The best science is at least sometimes unconventional, and there must be rewards for that as well.

Thursday, May 24, 2012

Magical science: now you see it, now you don't. Part I: What we mean by 'risk'

By

Ken Weiss

The life, social, and evolutionary sciences have a problem. We posted about the issue of their non-replicability last Friday but that is only part of the problem. They also have non-predictability (see a recent Times commentary), but both replicability and predictability are key elements of science as we know it.

It is difficult to make rigorous assertions that have the kind of predictive power we have come (rightly or wrongly) to expect of science, on the typical if often unstated assumption that our world is law-like, the way it seems that the physical and chemical universe are.

A clear manifestation of the problem is the way that findings in epidemiology and genetic risk say yes factor X is risky, then a few years later no, then yes again, and so on. Can't we ever know if factor X is a cause of some outcome Y? In particular, is X a risk factor? X could be a reason for natural selection, a component of some disease, and so on.

Often, we think of this as a probability. As we've posted before, that means that exposure to that factor yields some probability that the outcome will be observed. If you have two copies of a gene, there is usually a 50% risk or probability that a given one of your children (or parents, or sibs) will also have it.

So, in a strange turn of phrase, coffee or a given HDL cholesterol level is said to be a risk factor for heart disease, or a given genetic variant is a risk factor for cancer. And we try to estimate the level of that risk--the probability that if that factor is present, you will manifest the outcome. Among various possible risk factors, the modern concept of science has it that you are at some net or overall risk of the outcome, like having a heart attack, depending on your exposure to those risk factors.

In evolutionary terms, having a particular genetic variant can confer some probability (or some similar measure) of reproducing, or surviving to a particular age. Among various possible genetic risk factors, what you have puts you at some net risk of such an outcome, which is your evolutionary fitness in the face of natural selection, for example.

If we assume that enumerated causes of this sort (and that they really are causes) are responsible for a trait, then your exposure level can be specified. The causes might truly be deterministic, in the way gravity determines the rate at which an object will fall--here or anywhere in the universe--but our incomplete knowledge may be such that we can only express their effect in terms of probability.

Still, we assume that probabilistic causation is real. When things are the result of probabilities, we can know the causes but can't predict the specific outcome of any given instance. This is the sense in which we know a fair coin will come up Heads 50% of the time, but can't predict the result of a given flip. Actually, and we've posted about this before but the issue of probability is so central to much of science that we keep repeating it, the coin may be perfectly deterministic but we just don't know enough, so that for all practical purposes the result is probabilistic.

In such cases, which are clearly at the foundation of evolutionary inference and of genetic and other biomedical problems, we must estimate the risk associated with a given cause by choosing a sample from all those at risk, and seeing what happened to them. Then, we assume we know the causal structure and can then do what we must be able to do, if this is actual science: predict the outcome. This must be so if the world is causal, even if our predictions are expressed in terms of probabilities: given your genotype you have xx probability of getting yy disease.
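To make the sampling step concrete, here is a minimal sketch, not anyone's actual study design. It assumes a single made-up 'true' risk of 10% among the exposed, and the function names (`estimate_risk`, `repeated_estimates`) are hypothetical, invented for illustration. Each simulated 'study' draws a sample of exposed people and reports the fraction who show the outcome.

```python
import random


def estimate_risk(true_risk, sample_size, rng):
    """Return the fraction of a simulated sample that shows the outcome.

    true_risk is an assumed, made-up probability; in real life it is unknown.
    """
    cases = sum(rng.random() < true_risk for _ in range(sample_size))
    return cases / sample_size


def repeated_estimates(true_risk, sample_size, n_studies, seed=0):
    """Run several independent simulated studies of the same risk factor."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    return [estimate_risk(true_risk, sample_size, rng) for _ in range(n_studies)]


if __name__ == "__main__":
    # Same underlying risk, different sample sizes: compare the scatter.
    for n in (50, 5000):
        estimates = repeated_estimates(true_risk=0.10, sample_size=n, n_studies=5)
        print(n, [round(e, 3) for e in estimates])
```

Even in this idealized world, where the cause is real and its risk never changes, small samples will give estimates that scatter noticeably from study to study. The sketch says nothing about the harder problems, such as unmeasured factors or risks that change over time.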

So, with our huge and munificently funded science establishment, why is it that day after day the media tout the latest Dramatic Finding....that is just as noisily touted the next day when the previous assertion is overturned?

Why is it that we don't know if coffee is a risk factor for disease? Or isn't? Or isn't for the moment until some new study comes along? Or maybe until some environmental factor changes, like the type of filter paper McDonald's uses in its coffee maker, say--but how would we ever know whether that explains the flip-flopping findings?

Why indeed do we have to continue doing studies of the same purported risk factors to see if they are really, truly risks? These are fundamental questions not about the individual studies, but about the current practice of science itself.

If we look at the reasons, which is tomorrow's post, we'll see how shaky our knowledge really is in these areas, and we can ask whether it is even 'science'.

Wednesday, May 23, 2012

Just-So stories, revisited (again!): not even lactase?

By Ken Weiss

A lot of us love to spice up our publications, and our contributions to the media circus, with nice, taut, complete, simple adaptive evolution stories. Selection 'for' this trait happened because [*whatever nice story we make up*].

The media love melodrama, which sells, and our stories make us seem like intrepid insightful adventurers--those 'selective sweeps' that we declare, by which the force of some irresistible allele (variant at a single gene) devastates all its competitors, sometimes over the whole world, suggest such epic tales.

The fact that we know from decades of experience that the stories we have made up are unlikely to be true doesn't slow down our juggernaut of such stories. But one of the things we here on MT are here to do is ask how true they actually are--or, if we're not sure, why we assert them with such confidence. And here's one of the current, newly classic, human Just-So stories:

Lactase persistence and milk-drinking adults

Mammals when weaned generally lose the ability to digest milk. Adults usually can't break down the milk sugar, lactose, and the result (so the story goes) is grumbly gut and, one might put it, farty digestion leading to early death or (understandably!) failure to find mates and so reduced fitness. The reason is that while infants produce the enzyme lactase that in their gut breaks down lactose, the lactase gene is switched off by adulthood: if we don't drink milk we don't need it, and there'd be no mechanism to keep the lactase gene being expressed.

The gene is called LCT and its regulation, due to DNA regulatory sequence located nearby in the genome, is what determines whether gut cells do or don't use the gene. How, as a mammalian infant ages, this gene is gradually shut down is not well understood. And while this is true as a rule for humans, there are exceptions. The first that was carefully studied was the high fraction of Europeans, and especially northern Europeans such as Scandinavians, who can tolerate milk as adults (most people elsewhere, except in parts of Africa--see below--lose this ability, so the story goes).

The frequency of the Lactase Persistence allele (genetic variant), call it LP, is highest in northern Europe, and diminishes towards the Middle East. The genetic and archeological evidence have been interpreted as showing that the LP variant first arose by mutation in the Middle East--say, somewhere around Anatolia (Turkey)--and spread gradually, along with dairying, into Europe. Because of a supposed selective advantage, there was a sweep of LP as this occurred, so that by the time one gets to Scandinavia the LP frequency is high because, the story went, they had been dairying there for thousands of years. A roughly 1% natural selection advantage is assumed for this gradual, continent-wide increase in LP's frequency. One can make up stories about how adults would live longer or rut more successfully if they could slug down their domestic ruminants' fluids, but those are difficult to prove (or refute).
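To see what a steady 1% advantage implies, here is a rough Python sketch under a deliberately simple haploid selection model, in which carriers of LP leave (1 + s) offspring for every one left by non-carriers. The model, the function names, and the starting and target frequencies are all assumptions made for illustration; none of them comes from the studies behind the LP story, and drift, dominance, and migration are ignored.

```python
import math

def next_freq(p, s):
    """One generation of selection in a simple haploid model (an assumption)."""
    return p * (1 + s) / (1 + s * p)

def generations_to_reach(p0, target, s):
    """Count generations for the allele frequency to rise from p0 to target."""
    if not (0 < p0 < target < 1) or s <= 0:
        raise ValueError("need 0 < p0 < target < 1 and s > 0")
    p, gens = p0, 0
    while p < target:
        p = next_freq(p, s)
        gens += 1
    return gens

# Hypothetical frequencies: from 1% to 50% with a 1% advantage.
s = 0.01
gens = generations_to_reach(0.01, 0.5, s)
closed_form = math.log(99) / math.log(1 + s)
print(gens, round(closed_form, 1))
```

Under these assumptions the climb from 1% to 50% takes a few hundred generations, and the loop agrees with the closed-form value because the odds p/(1 - p) grow by a factor of (1 + s) each generation.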

Anyway, this was the adaptive scenario. When I talked to various clinicians in Finland, they generally dismissed the idea that inability to digest milk was harmful in any serious or life-threatening way to those Finns who by bad luck did not carry the LP variant. And when I ask Asian students in my classes if they can drink milk (they're not supposed to be able to according to the adaptive story) they almost all say 'yes' and are curious why I should ask such a question! So I had my doubts, and for this and other reasons as well.

Even granting all the evidence that LP had arisen in Anatolia and risen in frequency, the idea of a steady 1% advantage all across Europe over thousands of years seemed at least speculative--that is, even if there was a selective advantage for some reason, the story was rather pat, like so many selection stories. A demonstrated fitness-related advantage of adult milk-drinking was asserted but simply wasn't shown. Perhaps the high frequency of this LP trait was only an indirect result of something being selected for nearby on the same chromosome, or there was some reason other than nutrition why the LP variant would have been favored. Indeed, there was even evidence from residues in Anatolian pottery that milk sugar was cooked into digestibility long ago--so a genetic adaptation to it wasn't even necessary. Regardless of these details, the story stuck. It made a good tale for news media and textbooks alike.

More evidence?

But now a new paper, by several Finnish authors, has appeared in the Spring 2012 issue of Perspectives in Biology and Medicine, and it suggests something different. It suggests that milk usage hasn't been present in northern Europe for nearly long enough to explain the LP frequency. Their idea is that the variant spread into northern Europe, along with people, but that they brought the high LP frequency with them: it had already risen to high frequency before they arrived.

It is much easier to envision a combination of luck and selection, in some relatively localized population in Turkey or thereabouts, gradually adopting and adapting to adult dairying, which then spread as they expanded their territory in part because of their advanced farming technology, than it is to envision a continent-wide, steady selective story about milk-drinking.

The point here is that, if the new paper is right, even the lactase persistence story, which has become the classic exemplar of recent human adaptation (displacing even sickle cell and malaria in the public media), is simply oversimplified. Can't we even get that one right?

Probable truth

One has to say that the idea of lactase persistence being evolutionarily associated with dairying culture probably means something. East African populations that have a history of dairying also have a similar lactase persistence, which confers at least some higher level of lactose digestibility into adulthood--but this is due to different mutations affecting the gene's regulation, with no historical connection to the Anatolian story, and it is not shared with surrounding non-dairying populations. A similar selective advantage has been estimated for the African populations.

So either the lactose digestion story is basically correct, even if some details are still debatable, or this is only a coincidence. Or here, too, some other effect of this chromosome region was involved. Two such kinds of coincidence seem rather unlikely.

Thus, while some aspects of this story may be correct, the point is that even in the classic supposed clear-cut example of recent human evolution, that has been intensely studied in modern genomic detail, maybe we still haven't got it right in important ways.

The message is not to dismiss the LP story, but we should temper the confidence with which simple selection stories are offered--in general.

Why be a spoil-sport?

Unfortunately, there's no reward for humility in our society. We personally think that claims should be tempered, so that we as a scientific community, and the community that pays for science, can more accurately and quickly tell what is worth following up with further funds, and why. We also feel that our understanding of the universe we live in, and the system of life of which we are a part, is the job of science--and the job of universities is to educate. To us, that means provide students with the best understanding we currently have about the nature of life, history, arts, and technologies, not to pass on a superficial lore.

This is, we know, rather naive! We also know that neither we, nor a puny blog like this, is going to have any serious impact. But if we can keep these issues before the eyes of at least some conscientious people, even if we are regularly arguing for fewer rather than more claims, and regularly pointing to what seem to us to be deeper questions worthy of investigation, then we have hopefully done at least some service.

Tuesday, May 22, 2012

Slot machines and thoughts: neural determinism?

By Ken Weiss

Coin flips are probabilistic for all practical purposes (unless you learn how to "predetermine" the outcome, here). By 'probabilistic' we mean that the outcome of any given flip can only be stated as a probability, such as 50% chance of Heads: we can't say that a Heads will or won't occur. This is for all practical purposes, since if we knew the exact values of all the variables involved, standard physics could predict the outcome with complete certainty. Machines have been built to show this, as we've posted about before (e.g., here).

Slot machines are (purportedly) random dial-spinners that stop in ultimately random ways (that are adjusted for particular pre-set overall payoff levels, but not individual spins). In this sense, the slot machine is nearly a random device, but even the computer-based random number generator of modern slot machines is not 100% random and, in a sense, every spin could be predicted at least in principle.

So, as far as anybody can tell in practice, each flip, or each jerk of the one-armed bandit, is random. We still can say much about the results: We can't predict a given coin flip or slot-pull, but we can predict the overall net result of many pulls, to within some limits based on statistical probability theory--though never perfectly.
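The contrast between single flips and aggregate results can be sketched in a few lines of Python. This is only an illustration: the function names and the choice of flip counts are assumptions, and, fittingly, the pseudo-random generator used here is itself fully deterministic once its seed is known.

```python
import math
import random

def head_fraction(n_flips, seed=0):
    """Simulate n_flips fair coin flips; return the fraction that are Heads."""
    rng = random.Random(seed)  # seeded, so the 'random' flips are repeatable
    heads = sum(rng.random() < 0.5 for _ in range(n_flips))
    return heads / n_flips

def margin_95(n_flips, p=0.5):
    """Approximate 95% margin around p for the observed fraction of Heads."""
    return 1.96 * math.sqrt(p * (1 - p) / n_flips)

# The limits tighten as the number of flips grows, but never reach zero.
for n in (10, 1_000, 100_000):
    print(n, head_fraction(n), round(margin_95(n), 4))
```

No single flip is predicted here, but the fraction of Heads over many flips is expected to fall within limits that shrink with the square root of the number of flips.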

On the other hand, a casino is a collection of numerous devices (roulette wheels, poker tables, slot machines, and so on). Each is of the same probabilistic kind. Nobody would claim that the take of a casino was not related to these devices, not even those who believe that each one is inherently probabilistic. To think that would be to argue that something other than physical factors made up a casino.

But the take of a casino on any given day cannot be predicted from an enumeration of its devices! The daily take is the result of how much use was made of each device, of the decision-making behavior of the players, of the particular players that were there that day, of how much they were willing to lose, and so on. The daily take is an 'emergent' property of the assembled items. Interestingly, nonetheless, the pattern of daily takes can be predicted at least within some limits. This is the mysterious connection between full predictability and emergence, and it is a central fact of the life sciences.

Genes exist and they do things. On average, we can assess what a gene does. Clearly genes underlie what a person is and does. But each gene's net impact on some trait depends not just on itself, but on the rest of the genes in the same person's genome, and countless other factors. A particular individual's particular action is simply not predictable with precision from its genome (or, for that matter, its genome and measured environmental factors). There are simply too many factors and we can't assess their individual action in individual cases, except within what are usually very broad limits.

Brain games

A common current application of the issues here is to be found in neurosciences. There is a firm if not fervid belief that if we enumerate everything about genes and brains we'll be able to show that, yes, you're just a chemical automaton. Forget about the delusion of free will!

A story last week in the NY Times largely asserts that behavior is going to be predictable from the 'amygdala', a section of the brain. There is also a story suggesting that psychopaths can be identified early in life. And there are frequent papers about, let's call it, 'econogenomics', claiming they will save the day by showing how our genomes determine our economic behavior.