This is the third and final post on a recent article in The Land Institute's Land Report, describing advances and methods to identify and isolate desirable genetic variation in plant species, with the goal of sustainable agriculture by scientific, but efficient, methods.

This is pure modern genetics, combined with traditional empirical breeding as it has been practiced for many thousands of years, and on a formal Mendelian basis since the mid-19th century.

The discussion is relevant to the nature and effects of natural selection, which, unlike breeding choice, is not molecularly specific and is generally weak. That's why it's difficult to find desirable individual plants in the sea of natural variation, and why intentional breeding is so relatively effective: with a trait in mind, we can pick the few individual plants that we happen to like, and then isolate them for many generations, under controlled circumstances, from members of their species without that trait.

By contrast, natural selection seems usually to act very slowly. Among other things, if selection were too harsh, then perhaps a few lucky genotypes would do well, but the population would be so reduced as to be vulnerable to extinction. Strong selection can also reduce variation unrelated to the selected trait, and make the organisms less responsive to other challenges of life. If environments usually change slowly, selection can act weakly and achieve adaptations (though some argue that selection has its main, more dramatic effects very locally).
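To put rough numbers on how slowly weak selection acts, here is a minimal sketch using the textbook haploid selection recurrence p' = p(1 + s) / (1 + sp), where s is the selective advantage of a favoured allele. It counts how many generations the allele needs to rise from 1% to 99% under strong (s = 0.1) versus weak (s = 0.001) selection. The model ignores drift, dominance, and population size, and the two values of s are illustrative assumptions, not estimates from the Land Report article.

```python
def generations_to_rise(s, p_start=0.01, p_end=0.99):
    """Count generations for a favoured allele to go from p_start to p_end.

    Deterministic haploid selection: p' = p * (1 + s) / (1 + s * p).
    Drift, dominance, and finite population size are ignored.
    """
    p = p_start
    generations = 0
    while p < p_end:
        p = p * (1 + s) / (1 + s * p)
        generations += 1
    return generations


if __name__ == "__main__":
    for s in (0.1, 0.001):  # hypothetical strong vs weak selection
        print(f"s = {s}: {generations_to_rise(s)} generations")
```

Under these assumptions the weak-selection case takes roughly a hundred times as many generations (on the order of 9,000 versus about 100).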

With slow selection, even if consistent over many generations, variation arises at many different genes that can affect a trait in the favored direction. Over time, much of the genome may come to have variants that are helpful. But they may do this silently: even if variation at each of them still exists, there can be so many different 'good' alleles that most individuals inherit enough of them to do well enough to survive. But the individual alleles' effects may be too small to detect in any practical way.

These facts explain, without any magic or mystical arguments about causation, why there is so much variation affecting many traits of interest and why their genetic basis is elusive to mapping approaches such as GWAS (genomewide association studies).
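The arithmetic behind "collectively important but individually undetectable" can be sketched with a standard additive model. A biallelic locus with allele frequency p and additive effect a contributes 2p(1 - p)a² to the genetic variance, assuming Hardy-Weinberg proportions and unlinked loci. For detection, a common approximation for a one-degree-of-freedom association test says a variant explaining a fraction q of trait variance needs a sample of about (z_α + z_β)²(1 - q)/q. The sketch assumes the conventional genomewide threshold of 5 × 10⁻⁸ and 80% power; the 1,000 loci, their random frequencies and effects, and the heritability of 0.5 are all hypothetical.

```python
import random
from statistics import NormalDist


def per_locus_shares(n_loci=1000, heritability=0.5, seed=1):
    """Fraction of phenotypic variance explained by each locus (additive model).

    Allele frequencies and effects are hypothetical random draws; loci are
    assumed unlinked and in Hardy-Weinberg proportions.
    """
    rng = random.Random(seed)
    freqs = [rng.uniform(0.05, 0.5) for _ in range(n_loci)]
    effects = [rng.gauss(0.0, 1.0) for _ in range(n_loci)]
    # Variance contributed by each locus: 2 p (1 - p) a^2
    locus_var = [2 * p * (1 - p) * a * a for p, a in zip(freqs, effects)]
    genetic_var = sum(locus_var)
    # Pick environmental variance so that Vg / (Vg + Ve) equals the heritability
    env_var = genetic_var * (1 - heritability) / heritability
    return [v / (genetic_var + env_var) for v in locus_var]


def required_sample_size(q, alpha=5e-8, power=0.8):
    """Approximate N to detect a variant explaining fraction q of variance.

    N ~ (z_alpha + z_beta)^2 * (1 - q) / q for a two-sided 1-df test.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return (z_alpha + z_beta) ** 2 * (1 - q) / q


if __name__ == "__main__":
    shares = sorted(per_locus_shares())
    typical, largest = shares[len(shares) // 2], shares[-1]
    print(f"variance explained by all loci together: {sum(shares):.3f}")
    for label, q in (("largest", largest), ("typical", typical)):
        print(f"{label} locus explains {q:.4%}; N needed ~ {required_sample_size(q):,.0f}")
```

Under these assumptions half of the variance is genetic, yet the typical locus explains only a tiny fraction of a percent, and detecting even one such locus would take samples far larger than most studies.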

Of course, even highly sophisticated breeding doesn't automatically address variable climate, diet, and other conditions, which can be relevant--indeed, critical--to a strain's qualities. Molecular breeding is much faster than traditional breeding, but it still takes many generations. Think about this: even if it took only 10 generations, in humans that would mean about 250 years (roughly the age of the USA as a country) to achieve a result for a given set of conditions. So how could this kind of knowledge be used in humans, other than by molecularly based eugenics (selective abortion or genotype-based marriage bans), days to which we surely don't want to return?

Breeders might eventually fix hundreds of alleles with modern, rapid molecularly informed methods. But we can't do that in humans, nor as a rule identify the individual alleles, because our replicate samples come not from winnowing down over generations in a closed, or controlled, breeding population, but from new sampling of extant variation each generation, in a natural population.

The data and molecular approaches seem similar in human biomedical and evolutionary genetics, but the problem is different. As currently advocated, both pharma and 'personalized genomic medicine' essentially aim at predictions in individuals, based on genotype, or treatment that targets a specific gene (pharma will wise up about this where it doesn't work, of course, but lifetime predictions in humans could take decades to be shown to be unreliable).

It's hard enough to evaluate 'fitness' in the present, much less the past, or to predict biomedical risk from phenotype data alone, though such data are the net result of the whole genome's contributions and should be of predictive value. So how to achieve such prediction based on specific genotypes in uncontrolled, non-experimental conditions, if that is a reasonable goal, is not an easy question.

In ag species, if a set of even weak signals can be detected reliably in strain B, they can be introduced into a stock strain A by selective breeding. It need not matter that the mapping effort detects signals explaining only a fraction of the desired effect in strain B, because repeated iteration of the process can achieve the desired ends. With humans, risk can be predicted to some extent from GWAS and similar approaches. But so far most of the genetic contribution has eluded detection, weakening the power of prediction.
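The iterative strain-B-into-strain-A process resembles what breeders call marker-assisted backcrossing. Here is a minimal sketch under simplifying assumptions. A plant carrying the strain-B target regions is crossed repeatedly to strain A. In each generation we keep only progeny that still carry every target region (foreground selection), and among those we pick the one with the least remaining strain-B background (background selection). Loci are treated as unlinked, and the numbers of target and background loci and the family size are hypothetical.

```python
import random


def marker_assisted_backcross(n_targets=5, n_background=200, n_backcrosses=8,
                              family_size=50, seed=1):
    """Track strain-B background across repeated backcrosses to strain A.

    Each donor (strain B) allele passes to a progeny with probability 1/2;
    loci are assumed unlinked. Returns, per generation, the fraction of
    background loci still carrying a strain-B allele.
    """
    rng = random.Random(seed)
    targets = frozenset(range(n_targets))
    donor_background = set(range(n_background))  # F1: one B allele at every locus
    history = [len(donor_background) / n_background]
    for _ in range(n_backcrosses):
        carriers = []
        while not carriers:  # grow another family if no progeny kept all targets
            for _ in range(family_size):
                kept_targets = {t for t in targets if rng.random() < 0.5}
                kept_bg = {b for b in donor_background if rng.random() < 0.5}
                if kept_targets == targets:  # foreground selection on markers
                    carriers.append(kept_bg)
        # Background selection: keep the carrier with the least strain-B genome
        donor_background = min(carriers, key=len)
        history.append(len(donor_background) / n_background)
    return history


if __name__ == "__main__":
    for gen, frac in enumerate(marker_assisted_backcross()):
        print(f"generation {gen}: {frac:.3f} of background loci still carry strain B")
```

Under these assumptions the strain-B background roughly halves each generation (the textbook expectation is that the recurrent parent's share of the genome after n backcrosses is 1 - (1/2)^(n+1)), while the targeted regions are retained by construction.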

In humans, the equivalent question is perhaps how and when molecular-assisted prediction will work well enough in the biomedical context, or in the context of attempting to project phenogenetic correlations back into the deep evolutionary past accurately enough to be believable. Perhaps we need to think of other approaches. Aggregate approaches under experimental conditions are great for wheat. But humans are not wheat.

Thursday, March 31, 2011

Wednesday, March 30, 2011

Learning the lessons of the Land: part II

By

Ken Weiss

This series of commentaries (beginning yesterday) was inspired by the latest issue of the Land Institute's Land Report, which describes efforts to use modern science to develop sustainable crops that can conserve resources yet feed large numbers of people. We were motivated by the thought that not only is this important work, but it should inform our ideas about human--and evolutionary--genetics.

In our previous post we introduced the idea of molecular breeding, a genomewide association study (or GWAS)-like approach that experimental breeders in agriculture are taking to speed up and focus their efforts to breed desired traits into agricultural plants. Here, we want to continue that discussion, to relate the findings made in agricultural genetics to what is being promised for GWAS-based personalized genomic medicine.

Essentially, personalized medicine means predicting your eventual disease-related phenotypes from your inherited genotype (and here, we'll extend that beyond just DNA sequence, to epigenetic aspects of DNA modification, assuming that will eventually be identifiable from appropriate cells).

If breeders had been finding that, once seed with desired traits had been identified, genome-spanning genetic markers (polymorphic sites along the genome) pointed to a small number of locations with big effects, then we would quickly be able to find, and perhaps use, the actual genes diagnostically. This seems to be true for some plants with small genomes, for traits that seem to be due to the action of variants in one or only a few genes. This is just what we find for the 'simple' human diseases, or the subset of complex diseases that segregates in families in a way that follows Mendel's principles of inheritance. There are many examples.

But for many traits, including most complex, delayed-onset, lifestyle-related, common disorders that are the main target of the GWAS-ification of medicine in the Collins era of NIH funding, what is being found is quite different. Mapping is finding hundreds of genes, almost all of which either have very small individual effects or, if their effects are larger, are so rare that they are of minimal public health importance (even if very important to those who carry the dangerous allele). For these, the question is what to do with the countless variable regions of the genome that make up the bulk of the inherited risk.

This is the same situation as faced in the agricultural breeding arena, for many of the traits, like water- or drought- or pest-tolerance, nutrient yield, or other characteristics desired for large scale farming. The traits are genomically complex. Even with large samples and controlled and uniform conditions--very unlike the human biomedical situation--it is not practicable or practical to try to improve the trait by individual gene identification. Nor is it likely that introducing single exotic transgenes will do the trick (as many agribusinesses are acknowledging).

Instead, molecular breeding takes advantage of the plant's own natural variation to select those variants that do what is desired, simply by choosing the plants that transmit those variants, without attempting to engineer or even to identify what they are. We do not know how reliable the prediction of phenotype from genotype in these circumstances typically is, but the idea is that if you keep selecting plants with the desired regions of the genome that mapping identifies, and breeding them, for example, with strains that you like for other reasons, you can reduce reliance on individual prediction, because eventually every individual will be alike for the traits you were interested in.

Once that is the case, regardless of the genes or regulatory regions that are involved, you have your desired plants, at least under the conditions of nutrients, climate, and so on, in which the strain was developed.
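The claim that repeated selection on mapped regions eventually makes every individual alike can be checked with a toy simulation. All settings are hypothetical: 20 unlinked marker regions, each with a favoured allele starting at frequency 0.3, and each generation the 20% of plants carrying the most favoured alleles are kept and mated at random. The sketch reports the final favoured-allele frequencies and the variance of the marker score among plants.

```python
import random
from statistics import pvariance


def transmit(rng, genotype):
    """Pass one allele from a parent carrying 0, 1, or 2 favoured copies."""
    if genotype == 1:
        return int(rng.random() < 0.5)
    return genotype // 2  # 0 -> 0, 2 -> 1


def recurrent_selection(n_plants=500, n_regions=20, p0=0.3, keep_fraction=0.2,
                        generations=30, seed=2):
    """Truncation selection on marker score; returns (allele freqs, score variance)."""
    rng = random.Random(seed)
    pop = [[int(rng.random() < p0) + int(rng.random() < p0) for _ in range(n_regions)]
           for _ in range(n_plants)]
    for _ in range(generations):
        pop.sort(key=sum, reverse=True)  # marker score = favoured alleles carried
        parents = pop[: int(keep_fraction * n_plants)]
        # Random mating among selected parents; regions assort independently
        pop = [[transmit(rng, m) + transmit(rng, d)
                for m, d in zip(*rng.sample(parents, 2))]
               for _ in range(n_plants)]
    freqs = [sum(plant[j] for plant in pop) / (2 * n_plants) for j in range(n_regions)]
    return freqs, pvariance([sum(plant) for plant in pop])


if __name__ == "__main__":
    freqs, score_var = recurrent_selection()
    print(f"mean favoured-allele frequency: {sum(freqs) / len(freqs):.3f}")
    print(f"variance of marker score among plants: {score_var:.3f}")
```

Under these assumptions the favoured alleles approach fixation and the score variance collapses toward zero, which is the point at which individual prediction stops mattering: every plant carries the same selected regions.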

Clearly this experience is relevant for human genetics, and for evolutionary genetics of the same traits, a topic to which we will turn in our final post in this series.....

Van Gogh, Farmhouse in a Wheat Field, public domain


Tuesday, March 29, 2011

Learning the lessons of the Land: part I

By

Ken Weiss

This post is inspired by the latest issue of The Land Report, the thrice-yearly report by The Land Institute, of Salina, Kansas. This organization is dedicated to research into developing sustainable agriculture that can conserve water and topsoil, reduce industrial energy dependence, and yet produce the kind of large-yield crops that are needed by the huge human population.

An article in the spring 2011 issue concerns an approach called molecular breeding. Here the idea is to speed up traditional empirical breeding to improve crops, as a different (and better, they argue) means than traditional GM transgenic approaches, which insert a gene--often from an exotic species such as a bacterium--into the plant genome.

The important point for Mermaid's Tale is that crop breeders have been facing causal complexity for millennia, and from a molecular point of view for decades. Their experience should be instructive for the attitudes and expectations we have for genomewide association studies (GWAS) and other 'personalized genomic medicine.' To develop useful crop traits means to select individual plants that have a desired trait that is genetic--that is, that is known to be transmitted from parent to seed, and to replicate the trait (at least, under the highly controlled, standardized kinds of conditions in which agricultural crops are grown). For this to work, one needs to be able to breed, cross, or inter-breed seeds conferring desired traits to proliferate those into a constrained strain-specific gene pool. Traditionally, this requires breeding and selecting seed from desired plants, repeated for many generations.

The idea of molecular breeding is to use genome-spanning sets of genetic markers--the same kinds of data that human geneticists use in GWAS--to identify regions of the genome that differ between plants with desirable, and those with less desirable, versions of a desired trait. If the regions of the genome that are responsible can be identified, it is easier to pick plants with the desired genotype and remove some of the 'noise' introduced by the kind of purely empirical choice during breeding that farmers have done for millennia.
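The core computation of such a marker scan can be sketched in a few lines: for each marker, measure how strongly genotype (0, 1, or 2 copies of an allele) tracks the trait across plants. This toy version uses entirely simulated data and a plain Pearson correlation per marker. The sample size, number of markers, effect size, and the single causal marker are hypothetical, and real analyses typically use more elaborate statistical models.

```python
import random


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def marker_scan(n_plants=300, n_markers=50, causal=20, effect=1.0, seed=3):
    """Simulate genotypes and a trait; return |r| between each marker and the trait."""
    rng = random.Random(seed)
    genos = [[int(rng.random() < 0.5) + int(rng.random() < 0.5) for _ in range(n_markers)]
             for _ in range(n_plants)]
    # Hypothetical trait: additive effect at one marker plus environmental noise
    trait = [effect * g[causal] + rng.gauss(0.0, 1.0) for g in genos]
    return [abs(pearson([g[j] for g in genos], trait)) for j in range(n_markers)]


if __name__ == "__main__":
    scores = marker_scan()
    best = max(range(len(scores)), key=scores.__getitem__)
    print(f"strongest marker: {best} (|r| = {scores[best]:.2f})")
```

Here the simulated causal marker stands out clearly because its effect is large; with many small effects, as in the traits discussed in this series, no single marker would.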

Relating phenotype to genotype in this way, to identify contributing regions of the genome and select for them specifically, is in a sense like personalized genome-based prediction. As discussed in the article 'Biotech without foreign genes', by Paul Voosen in The Land Report (which, unfortunately, doesn't seem to be online), molecular breeding is a way to greatly speed up the process of empirical crop improvement. What we mean by empirical is that the result uses whatever genome regions are identified, without worrying about finding the specific gene(s) in the regions that are actually responsible (in technical terms, this means using linkage disequilibrium between the observed 'marker' genotype and the actual causal gene).
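Linkage disequilibrium can be made concrete with the usual two-locus measures. For a marker with alleles A/a and a causal site with alleles B/b, D = p_AB - p_A·p_B and r² = D² / [p_A(1 - p_A)p_B(1 - p_B)]. Under a simple additive model with a single causal variant, a marker captures roughly r² of the trait variance that the causal site explains, which is why a marker can stand in for an unidentified gene. The haplotype frequencies and the 1% causal share below are hypothetical.

```python
def ld_stats(p_AB, p_Ab, p_aB, p_ab):
    """Return (D, D', r^2) from four haplotype frequencies that sum to 1."""
    if abs(p_AB + p_Ab + p_aB + p_ab - 1.0) > 1e-9:
        raise ValueError("haplotype frequencies must sum to 1")
    p_A = p_AB + p_Ab  # frequency of marker allele A
    p_B = p_AB + p_aB  # frequency of causal allele B
    d = p_AB - p_A * p_B
    # D' scales D by its maximum possible magnitude given the allele frequencies
    if d >= 0:
        d_max = min(p_A * (1 - p_B), (1 - p_A) * p_B)
    else:
        d_max = min(p_A * p_B, (1 - p_A) * (1 - p_B))
    d_prime = d / d_max
    r2 = d * d / (p_A * (1 - p_A) * p_B * (1 - p_B))
    return d, d_prime, r2


if __name__ == "__main__":
    d, d_prime, r2 = ld_stats(0.45, 0.05, 0.05, 0.45)
    print(f"D = {d:.2f}, D' = {d_prime:.2f}, r^2 = {r2:.2f}")  # D = 0.20, D' = 0.80, r^2 = 0.64
    causal_share = 0.01  # hypothetical: causal site explains 1% of trait variance
    print(f"marker captures about {r2 * causal_share:.4f} of trait variance")
```

The weaker the disequilibrium, the more of the causal signal is lost at the marker, which compounds the problem of small individual effects.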

For crops with small genomes, like rice, breeders have been more readily able to identify specific genes responsible for desired traits. But for others, the large size of the genome has yielded much more subtle and complex control that is not dominated by a few clearly identifiable genes. Sound familiar? If so, then we should be able to learn from what breeders have experienced, as it may apply to the problem of human genomic medicine and public health.

We'll discuss that in our next post.

Minnesota cornfield, Wikimedia Commons


Monday, March 28, 2011

Awareness and evolution

By

Ken Weiss

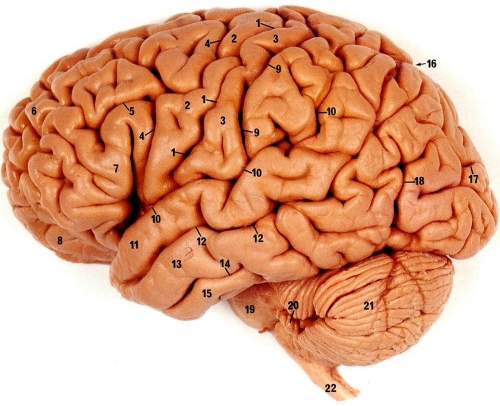

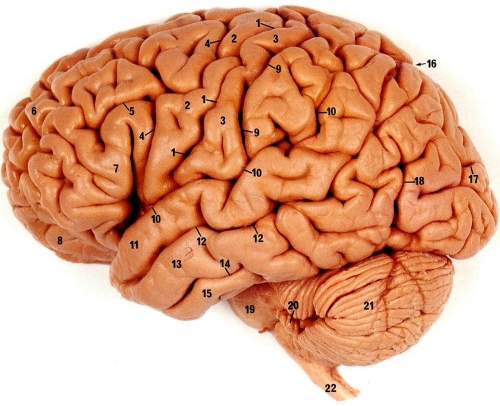

We wrote last week about the question of the nature of 'awareness' or 'self' in Nature (here and here). We know our own consciousness, but really not much about that of other organisms--indeed, not really and truly about that of any individual other than ourselves.

Studying the 'neural correlates' or behavioral indications of consciousness can get us only so far, mainly descriptive phenomenology. But either religions are right, and there really is something we call the 'soul' that is separate from our biology, or our consciousness is somehow the result of our genomes. That is naturally one of the biggest prizes for research to try to understand.

But consciousness or other kinds of awareness--self-awareness or otherwise--would seem to be so far removed from the direct level of the genome, and so dependent on inordinately complex interactions of components, that it is difficult to see how genomes will ever explain it. The term for this is that consciousness is an 'emergent' trait relative to genomes.

There's little if any doubt that we'll learn of many genes that, when mutated, damage consciousness or alter states of 'awareness' (we're not talking about recreational drugs here--or are they relevant?). But how can interacting proteins--what genes code for--produce the kind of self-aware monitoring of the inside and outside world? We may have to develop some very different ways of knowing even to know what we mean in this kind of research.

If our basic understanding of evolution is close to the truth, if and when we eventually develop such scientific approaches, one thing that can be predicted safely is that we'll find the genes, and hence the roots, of consciousness much more widely distributed in Nature than our usual, human-centered view has led us to think. Indeed, many of the same phenomena may be found in plants--and many precedents suggest we'll find rudiments even in bacteria.

Whether we'll ever be able to ask the carrot how it 'feels' is another question.


Friday, March 25, 2011

The Zen of GWAS: the sound of one hand clapping

So we've come to this: Nature is applauding the latest genomewide association study (GWAS) on schizophrenia as "welcome news" because it is "zeroing in on a gene" to explain this devastating disease whose etiology has been frustratingly elusive for so long (Hugh Piggins, "Zooming in on a Gene"). Many authors have found 'hits' by mapping, but most of them, if not perhaps all, have not been replicable. The largest recent study we know of, prominently published (Nature, 2009), estimated that hundreds of genes contribute to schizophrenia. Piggins does acknowledge that GWAS have been much criticized for explaining so little, but, he says, this one's different (well, at the very least, it'll sell more copies of Nature).

Speaking of copies, rare copy number variants (CNVs) have been found to be associated with schizophrenia and other neurodevelopmental disorders, including autism. The operative word here is 'rare'. Copy number variants are generally large (1,000 base pairs or more) genomic insertions or deletions that, by definition, vary widely among individuals. They're either inherited from a parent who carries the CNV or arise anew. When CNVs were first recognized, it was thought that they would be found to be associated with many diseases, but the most common CNVs seem not to be disease-related at all. After all, genomes evolve largely by segment duplication.

So given how Nature touts this result, we thought we must have misread, surely. But, no, the paper confirms:


Here we performed a large two-stage genome-wide scan of rare CNVs and report the significant association of copy number gains at chromosome 7q36.3 with schizophrenia. Microduplications with variable breakpoints occurred within a 362-kilobase region and were detected in 29 of 8,290 (0.35%) patients versus 2 of 7,431 (0.03%) controls in the combined sample.

That's 0.35%, as in about 3.5 patients per thousand. That's a signal so weak that even a smoke alarm couldn't detect it. So, what's the real import of this finding? Nothing new at all -- schizophrenia is a complex disorder, or suite of disorders, that is multigenic and/or has multiple different causes, like most other complex diseases, as has been shown over and over.
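The counts in the quoted abstract can be turned into the usual case-control summaries. A minimal sketch follows, using only the figures quoted above (29 of 8,290 patients, 2 of 7,431 controls). The attributable-fraction formula p_cases·(OR - 1)/OR is a standard approximation that assumes the association is causal; applying it here is our illustration, not the paper's analysis.

```python
def case_control_summary(case_carriers, n_cases, control_carriers, n_controls):
    """Return carrier frequencies, odds ratio, and attributable fraction among cases."""
    p_cases = case_carriers / n_cases
    p_controls = control_carriers / n_controls
    odds_ratio = (case_carriers / (n_cases - case_carriers)) / (
        control_carriers / (n_controls - control_carriers))
    # Share of all cases attributable to the variant, if the association were causal
    attributable = p_cases * (odds_ratio - 1) / odds_ratio
    return p_cases, p_controls, odds_ratio, attributable


if __name__ == "__main__":
    p_cases, p_controls, odds_ratio, af = case_control_summary(29, 8290, 2, 7431)
    print(f"cases {p_cases:.2%}, controls {p_controls:.2%}")  # cases 0.35%, controls 0.03%
    print(f"odds ratio ~ {odds_ratio:.1f}")
    print(f"attributable fraction of cases ~ {af:.2%}")
```

The odds ratio looks large (around 13), but even taken at face value the duplication would account for only about a third of one percent of cases.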

But the authors go on to discuss the gene (VIPR2) at the identified chromosomal locus that they think might be causative, and conclude, in what may be the Oversell of the Century to date:

The link between VIPR2 duplications and schizophrenia may have significant implications for the development of molecular diagnostics and treatments for this disorder. Genetic testing for duplications of the 7q36 region could enable the early detection of a subtype of patients characterized by overexpression of VIPR2. Significant potential also exists for the development of therapeutics targeting this receptor. For instance, a selective antagonist of the VPAC2 receptor could have therapeutic potential in patients who carry duplications of the VIPR2 region. Peptide derivatives and small molecules have been identified that are selective VPAC2 inhibitors, and these pharmacological studies offer potential leads in the development of new drugs. Although duplications of VIPR2 account for a small percentage of patients, the rapidly growing list of rare CNVs that are implicated in schizophrenia indicates that this psychiatric disorder is, in part, a constellation of multiple rare diseases. This knowledge, along with a growing interest in the development of drugs targeting rare disorders, provides an avenue for the development of new treatments for schizophrenia.


You may not have heard of this infamous gene, so for your edification, its name is Vasoactive intestinal peptide receptor 2 (hence VIPR2). The ultra high plausibility of this Major Gene for--what was it? schizophrenia--is made clear by the sites in which it is expressed: the uterus, prostate, smooth muscle of the GI tract, seminal vesicles, blood vessels, and thymus. Wikipedia adds as an afterthought that VIPR2 is also expressed in the cerebellum (whew! A narrow escape: a chance for relevance?).

In fact, here's a section from GenePaint showing VIPR2 expression in a 14.5-day mouse embryo. The gene is expressed where you see the darker blue -- the snout, the vertebrae, the ribs and lungs.... Not in the brain, but then this is only one stage in development, so its relevance to brain function can't be ruled out.

But from the evidence, targeting this for therapy might ease digestion and calm the nerves.....including those in the genitals. (So maybe schizophrenia is a sex problem, after all.)

We thought and thought what would be the right way to characterize this stunning discovery. A supernova of genetics? Darwin redux? The sting of the VIPR2? No, those images are too pedestrian. We needed to go much deeper, to something with much more profound imagery, to capture what has just been announced.

Of course, it's possible that we've missed something in the story that is far more important than our impression has been. It's always possible since we're no less fallible than the next person.

Nonetheless, based on our understanding of the story, we thought, well, Zen Buddhism is about as profound as it gets, in human thought and experience. So we decided that the clamour of this new finding, the glory of GWAS, was the roaring sound of one hand clapping. Listen very, very (very) carefully, and you, too, may be able to hear it!

Thursday, March 24, 2011

Chewing a bit of evolutionary cud.....

By

Ken Weiss

Yesterday we ruminated about the nature of self, and how that relates to the organism's sense of self, and to an observer's ideas about what the organism's sense of self might be. We can pontificate about what exists, or doesn't, but ultimately we are unable to go beyond what someone reports or our definition of self. If an ant is not conscious, in our sense, does it have a sense of 'self'? And if a person has consciousness, can s/he really have a sense that s/he has no self?

Speaking of rumination, let's ruminate a bit about the ruminant and its position in life. The cow or bull or steer must view the world from the position of its 'self'--whatever that means in bovine experience. As with any individual, in any species, at any time, it strives to survive, and that 'striving' is genetic in the evolutionary sense and need have no element of consciousness. So by giving lots of milk or growing tasty meat, cattle are thriving evolutionarily. Yes, beef cattle are killed, but we all die eventually, and by the subtle trick of offering themselves up to be killed (again, it's the act, not the awareness, that matters in evolution), cattle genes have incredible, almost unprecedented fitness!

With our inexcusable human arrogance, we'd say no, this is artificial not natural selection. From our point of view, that's true: we are choosing which bovine genes are to proliferate. But the bovine genome is fighting back, offering up genetic choices for our favoritism. More importantly, from the point of view of cow- or beef cattle-selfs, it doesn't matter what's doing the choosing: the climate, the predators, or the agronomist. However cattle got to be here, they are here, and as long as the environment (that is, McDonald's and Ben and Jerry's) stays favorable, cattledom will thrive. From the cattle's selfness perspective, it doesn't matter whether it's the breeder or the weather that leads it to be so successful. When we enter the Vegetarian Age, things may change, but so do they always for every species in changing environments.

The real difference between how cattle got here and how monarch butterflies got here is that we presume there is no conscious hand guiding 'natural' selection, whereas there is one (us) guiding 'artificial' selection.

But there are long-standing discussions about the extent to which our past evolution channels our future, making it somewhat predictable--'canalization' is the classical word for this. Evolution can only mold things in directions that viable genetic variation enables. If a species' biology and genomes are so complex that only certain kinds of genetic change are viable, then there are only so many ways it can change. That is not a conscious hand, but from the organism's viewpoint, it's not so completely different from artificial selection.

So in that sense it's we who make a distinction between our guiding hand and Nature's. It may be worth ruminating about this, just for the fun of it, because it's a kind of human (self-)exceptionalism by which we create a difference, in our own minds, about how natural change comes about. But 'in our own minds' means a distinction that is the result only of our own selfness. And we tend to deny selfness to any other species.

What would all of this look like to the proverbial Martian, whose assessment machinery may bear no resemblance to 'consciousness' and who thus may not see us as being so separate from the rest of Nature's clockworks?

Cow: Wikimedia Commons

Wednesday, March 23, 2011

You are who you are....aren't you?

By

Ken Weiss

Do you exist? Do we? Does that ant crawling along your window-sill? This post was occasioned, as so often is the case, by a story in the news, in this case in The Independent, by Julian Baggini, who has just written a book about what it means to be self-aware and about the strange experiences of people who exist but in various ways lose the sense that they exist.

In the days of classical Greece, the Solipsists were a school of philosophers who wondered whether you were all there was in existence--everything else was in your imagination. After all, how can you prove that anything, much less anybody, exists? But this is very different from somehow feeling that even you yourself don't exist, and that is hard to imagine for those of us who, well, know that we do. Or at least think we do!

In the 18th Century, Immanuel Kant discussed the problem of our knowing only what our sensory systems tell us, rather than what things actually are in themselves. He assumed that those things existed, but dealt with the consequences of our limited ability to know them directly. But again, at least the issue is what we know about what's 'out there' rather than what's 'in here'.

In modern times, there have been many noteworthy attempts to grapple with the former issue. But what is 'self'? Whether we state it thus or not, it usually boils down to consciousness. And there is of course the view that only we perfect humans have it. That is very un-evolutionary thinking, since nothing so complex arises out of nothing.

Research into consciousness faces many barriers, not least of which is that it is presently impossible to objectively study something that is entirely (indeed, by definition) subjective. Going back to the founder of modern psychology, William James, to the present--including the last years of work by Francis Crick (co-discoverer of the structure of DNA)--what we do is study what we can see or measure about our subjects. These observables have recently been referred to as the 'neural correlates of consciousness'.

We can ask people what they experience, but we can't ask a functioning brain that lacks conscious responsiveness how it feels about itself. And from the kinds of work that have been done, it seems unlikely that people whose brains have been damaged, and who can respond to the world but do not have 'consciousness' (including the 'split-brain' subjects, whose hemispheres have been surgically separated to treat severe epilepsy), can tell us that they do not exist.

As a result, whatever this story is actually reporting, probably no reader of this post can directly report experiencing non-existence, so we can only think about what those who say they do have that experience could possibly mean. Or consider the carrot: it's a complex, organized living structure, presumably without consciousness....but does it have self-awareness?

Baggini concludes

People with Cotard's syndrome, for instance, can think that they don't exist, an impossibility for Descartes. Broks describes it as a kind of "nihilistic delusion" in which they "have no sense of being alive in the moment, but they'll give you their life history". They think, but they do not have sense that therefore they are.

Then there is temporal lobe epilepsy, which can give sufferers an experience called transient epileptic amnesia. "The world around them stays just as real and vivid – in fact, even more vivid sometimes – but they have no sense of who they are," Broks explains. This reminds me of Georg Lichtenberg's correction of Descartes, who he claims was entitled to deduce from "I think" only the conclusion that "there is thought". This is precisely how it can seem to people with temporal lobe epilepsy: there is thought, but they have no idea whose thought it is.

You don't need to have a serious neural pathology to experience the separation of sense of self and conscious experience. Millions of people have claimed to get this feeling from meditation, and many thousands more from ingesting certain drugs.

Neuroscience and psychology provide plenty of data to support the view that common sense is wrong when it thinks that the "I" is a separate entity from the thoughts and experiences it has. But it does not therefore show that this "I" is just an illusion. There is what I call an Ego Trick, but it is not that the self doesn't exist, only that it is not what we generally assume it to be.

This is a mind-bender, a philosophical as much as a scientific phenomenon. Are you you....or aren't you?

Tuesday, March 22, 2011

Universities: education vs training, in their 1000 year history

The subject of the March 17th BBC Radio 4 program, In Our Time, was medieval universities.

The first universities were international -- people came from all over Christendom, particularly to Paris, because there schools were supported by competing ecclesiastical authorities. The freedom for a student or teacher to move to another school if he fell out with one was attractive. And in a city like Paris, the infrastructure was conducive to being a student, because students could easily find living quarters or jobs.

In the 11th and 12th centuries a new type of institution started to appear in the major cities of Europe. The first universities were those of Bologna and Paris; within a hundred years similar educational organisations were springing up all over the continent. The first universities based their studies on the liberal arts curriculum, a mix of seven separate disciplines derived from the educational theories of Ancient Greece.

The universities provided training for those intending to embark on careers in the Church, the law and education. They provided a new focus for intellectual life in Europe, and exerted a significant influence on society around them. And the university model proved so robust that many of these institutions and their medieval innovations still exist today.

Before universities, education was provided by monastic schools. But population growth and increasing urbanization in Europe led to an increase in demand for a more sophisticated, professional theology, law and medicine, and the response was the institutionalization of these subjects in universities, something that has not changed in 1000 years.

Map of medieval universities in Europe, public domain

Standards were set for degrees, and degrees became sought-after marks of erudition, though perhaps with a dual purpose. Before universities, students could end up competing with their own teachers for pupils, while after universities were established, students instead began to compete among themselves for jobs. This was because masters could control their students by refusing to grant a degree if they misbehaved.

Even so, in the beginning many people went to the university and did not graduate, and that was perfectly fine. They would get the fundamentals and then go on to a job as a teacher, a scribe and so on. Graduation was for those who wanted a high-powered job in the administration of the church or state. Now, of course, our society is much more credential-oriented. Many professional jobs require degrees, even while many people with degrees find it harder and harder to get the jobs for which they are credentialed. (Indeed, a piece in the New York Times last week asks whether the astronomical cost of getting an education, especially an elite education, is worth it any longer, pointing out that many of the world's wealthiest didn't earn a college degree.)

Teaching in the early universities was entirely in Latin, and students all first had a common grounding in the arts. This meant that specialists could keep talking to each other in a common language, with a common educational foundation, even after their scholarly paths diverged. In fact, students would follow the same course for their first several years in any of the early six or seven universities.

Information came from very old texts. Geometry went back to Euclid, music back to the harmonies discussed by the Greeks, and philosophy was all Aristotle. The result was that students all spoke the same language.

Even the early universities were very expensive -- either wealthy families paid, or the Church paid, or students worked. Universities were originally simply rented rooms, and could be nomadic -- if things went poorly in one city, the university could move. Cambridge, for example, was founded by a migration from Oxford. Perhaps, with online universities, we are reviving the model of universities without a fixed home, as now a student can pick and choose his or her courses from all over the world.

University infrastructure developed over time. The British model of colleges and residential halls gave students more opportunities to study, and served in some senses to tame students, who were all male, and who came to school at age 14 or 15 with things other than books on their minds. Living in college, with a master who was also a moral tutor, meant that these boys led somewhat less raucous lives than they had when they were free to wander the city.

The growth of the monied economy in the 11th and 12th centuries was a crucial factor in the development of universities. A young man couldn't barter a flock of sheep for an education. And universities generated a degree of social mobility: a poor student could get a scholarship from the local church and become upwardly mobile once he got his education. Also, colleges were set up as charitable foundations, so talented poor students were able to continue their studies.

Of course, before the universities as we know them now, there were many schools and very high intellectual achievement in the Islamic caliphates. Before that, there were major institutions of learning such as the great library at Alexandria, and even earlier, of course, Plato's Academy and Aristotle's Lyceum, and presumably many others (that we personally don't know about). And we are writing here only of the western tradition.

While modern universities still follow the medieval model to a large extent, what has changed, particularly in the 'research' universities, is the diminished role of teaching, as the stress on research grows at the expense of students (de facto rather than as formal policy, though we all know it's true). Of course, most research at any given time is not worth much, because great ideas and discoveries come hard. But research has become a bauble of success as universities have become more middle-class and less elite, and as education has become more vocational, rather than the acculturation of the privileged in the narrow skills and talents that were important to their stratum of society in medieval times.

Eventually, it is likely that there will be a return to an emphasis on education, as research may become too expensive with too little payoff. There may also be a return to a broader, general ('liberal arts') kind of education to replace hyper-focused technical training. But only time will tell how much such change will occur, or when, or what else may happen instead.

Monday, March 21, 2011

Honoring Masatoshi Nei

By

Ken Weiss

There was a special meeting here at Penn State this weekend, a gathering to honor the distinguished, perhaps nearly legendary, Masatoshi Nei on his recent 80th birthday. Nei's work has been on molecular evolutionary genetics, in particular as it applies to the evolution of DNA sequences. A rather incredible array of people attended, including Prof. Nei's many distinguished former students and other leading population geneticists.

Many interesting papers were given, but in particular we wanted to mention some work presented by Michael Lynch. He has been stressing the role of chance (drift) in the assembly of genomes by evolutionary processes. In particular, he has shown that much of our genome has not been the result of strong, highly focused natural selection, as widespread mythology about evolution would have it. Instead of, or in addition to, natural selection, many variants that are not the most 'fit' in their time can still advance even to fixation, replacing other variants at their respective loci. This can happen, for example, if their fitness 'deficit' is quite small relative to the most fit variant in the population.

Mike Lynch's talk here concerned the way in which the mutation rate was molded in the ancestry of species living today. The main idea is that if mutations, or mutation rates, are only slightly deleterious, then selection is rather powerless to mold them, and again much of what happens depends on drift. There are regular relationships between mutation rates, the amount of genetic variation, and genome and population sizes (the latter affects the power of drift--chance--in determining what survives or rises in frequency and what doesn't). If you're interested in this, his book ("The Origins of Genome Architecture") and a recent paper in Trends in Genetics (2010) are very much worth reading.
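Why population size matters for slightly deleterious variants can be made concrete with a standard population-genetics result, Kimura's diffusion approximation for the chance that a single new mutation eventually reaches fixation. This is our own illustration, not Lynch's analysis, and it is only a sketch under simplifying assumptions: a randomly mating diploid population of constant effective size N, additive selection with coefficient s (heterozygote fitness 1 + s), and no further mutation. The function name is hypothetical.

```python
import math


def fixation_probability(N: int, s: float) -> float:
    """Probability that one new mutant copy eventually fixes.

    Kimura's diffusion approximation for a diploid population of constant
    effective size N, additive selection coefficient s (heterozygote
    fitness 1 + s), and starting frequency 1/(2N):

        u = (1 - exp(-2s)) / (1 - exp(-4Ns))
    """
    if N < 1:
        raise ValueError("N must be a positive population size")
    if s == 0.0:
        return 1.0 / (2 * N)  # neutral limit: the allele's fate is pure drift
    x = -4.0 * N * s
    if x > 700.0:
        # Strongly deleterious relative to drift: expm1(x) would overflow,
        # and 1 / expm1(x) is indistinguishable from exp(-x) at this size.
        return math.expm1(-2.0 * s) * math.exp(-x)
    # expm1 keeps precision when s or N*s is tiny; the two minus signs cancel.
    return math.expm1(-2.0 * s) / math.expm1(x)


if __name__ == "__main__":
    s = -0.001  # a slightly deleterious allele
    for N in (100, 10_000):
        u = fixation_probability(N, s)
        # Compare with the neutral expectation 1/(2N).
        print(f"N={N}: u={u:.3g}, relative to neutral={u * 2 * N:.3g}")
```

With s = -0.001, the mildly harmful allele in a population of 100 fixes at roughly 0.81 times the neutral rate, so drift nearly swamps selection; with N = 10,000, the same allele's chance is effectively zero. Real populations add dominance, fluctuating size, and linkage, so the numbers are only indicative.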

The point of mentioning this here on Mermaid's Tale is that the idea that fine-tuning by natural selection is the, or even the major, factor in evolution is exaggerated, as we've often said. Every species here today is the result of a 4-billion-year successful ancestry, so we're all 'adapted' to what we do (if not, we're headed for extinction). Selection removes what really doesn't work. But evolution is very slow generally and the evidence strongly suggests that there are many ways to be successful enough, and that is good enough to proliferate. While there are many instances of what appear to be finely selected, exotically specialized adaptations (and Darwin wrote about many of them!), that is not the whole story (and perhaps not the main story?) of evolution.

Friday, March 18, 2011

Today's hot health advice: Don't be rash, or saucy!

By

Ken Weiss

Well, in the latest issue of the Annals of Hyperprecision, we find the sage advice that to 'reduce' the risk of bowel cancer, you should not eat more than 70g of red meat per day. That, we're told, means no more than 3 rashers of bacon or 2 measly sausages (but not both!).

Now the study reported more precisely that

Eating 100 to 120g of red and processed meat a day - things like salami, ham and sausages - increases the risk of developing the condition by 20 to 30%, according to studies.

Although very depressing for us bacophiles, at first glance this advice has all the panache of scientific soundness, but less so when you think seriously about it. The values seem very precise, and while we haven't seen the original paper, we presume this was a regression analysis of cases of colorectal cancer per 100,000 per year vs mean grams of the raw Alley Oop diet per day. The cutoff of 70g and the vague quoted values are of course subjective judgments. And these would properly have to be based on some sort of statistical significance test, which entails issues about the size and nature of the sample, measurements, and other assumptions.
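To see how much the quoted figures leave open, here is a minimal sketch that assumes, purely for illustration, a log-linear dose-response: each extra gram per day multiplies risk by the same factor, with a relative risk of 1 at zero intake. We have not seen the original paper, so this is not necessarily the model it used, and the function name is hypothetical.

```python
import math


def log_linear_rr(dose_g: float, rr_ref: float, dose_ref_g: float) -> float:
    """Relative risk at a daily intake of dose_g grams, ASSUMING ln(RR) rises
    linearly with intake (RR = 1 at zero intake) and passes through the
    reference point (dose_ref_g, rr_ref). A hypothetical model, not the study's."""
    if dose_ref_g <= 0 or rr_ref <= 0:
        raise ValueError("reference dose and relative risk must be positive")
    beta = math.log(rr_ref) / dose_ref_g  # log relative risk per gram per day
    return math.exp(beta * dose_g)


# Bracket the quoted figures (20 to 30% higher risk at 100 to 120 g/day) and
# ask what each combination implies at the recommended 70 g/day ceiling.
for rr_ref in (1.2, 1.3):
    for dose_ref in (100, 120):
        rr70 = log_linear_rr(70, rr_ref, dose_ref)
        print(f"RR {rr_ref} at {dose_ref} g -> RR at 70 g = {rr70:.3f}")
```

Under that assumption, the four corners of the quoted range imply a relative risk at 70 g/day of roughly 1.11 to 1.20: the recommended ceiling still carries some excess risk relative to none, and the size of that excess nearly doubles depending on which end of the range one takes. Turning this into absolute cases would also require the baseline incidence, which the quoted figures don't include.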

And of course 'two sausages' could mean the modest breakfast links shown above, or (preferably) the Cumberlands shown below--the report was British, after all, and it seems just what you'd expect as public advice from a government committee!

We would not want to undermine public confidence in their government, and certainly not to trivialize the risk of the raw stuff for your intestinal health. There are plenty of reasons to avoid sausages (without even thinking of the kind of meat that goes into them, nor the environmental cost of meat vs plant food). Colorectal cancers are complex, and dietary intake estimates are notoriously imprecise (which is why some researchers want to photograph every bite their study subjects eat), despite what committee reports may suggest. Further, the report discusses whether cutting back in this way on meat consumption will lead to diseases due to iron deficiency.

Damn, this world is complicated! Reports like this are an occasion to reflect beyond today's lunch, and what would go good with mash if we have to shun the links, and to consider the nature of scientific evidence in the observational setting where data are imprecise and hard to come by, assumptions many, and risk decisions complex.

If all we can realistically hope to do is keep the inevitable risks we face in life to a sensible level, then perhaps the oldest medical advice is still the best (and it applies to many other areas of the life sciences as well): moderation in all things.

Well, take heart all! The latest issue of the Annals also has some compensatory advice: moderate alcohol is good for you! Just don't mix your liquid lunch with a BLT.

Thursday, March 17, 2011

An army of ants

By

Ken Weiss

We've posted our views many times on what we believe are the excesses, and even disingenuous self-promotion, of the atOmic bombs that are becoming so predominant in the science landscape: too many projects predicated on a prevailing, perhaps ephemeral, view that we must use high and costly technology to measure absolutely everything on absolutely everyone (GWAS, biobanks, proteomics, connectomics, exposomics,....). You can search MT for those posts.

It isn't that science is bad, it's that it has become a system for capturing funds as much as for solving the nominal problems on which the largess is provided. We raise various objections, but of course we are fully aware that we and others who attempt to raise these issues are ants relative to the elephants, and a small army of ants at that. It might be debatable how much merit there is in our views, but there is no debating how much leverage those views have: none! The elephants, those with strong vested interests, certainly and inevitably trample the ants. Only something like a true budget crunch (not the proposed 5% cut in NIH funding that has generated so much loud bleating by the research interests) might force a major change. At least at first, such a budget cut will only intensify the competition among the elephants and the trampling of ants. More likely, things will evolve as people get bored with the lack of definitive results and move on to other things. Whether there will be an atOmics meltdown is unpredictable, but in our environment, it won't move things in a more modest direction--in claims or costs.

As a general policy, there is a way to rectify the situation, up to a point at least. It is to mandate that studies can only be of some maximal size, no bigger. Small samples are weak samples. They can't detect everything. But what they can detect is what's clear and most important! They leave the chaff behind.
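A textbook power calculation illustrates the claim that small samples catch only clear, large effects. This is a minimal sketch. It assumes a simple comparison of two group means with a two-sided z-test and the normal approximation. The function `approx_power` and the effect sizes tried are hypothetical illustrations, not anything taken from a particular study.

```python
from statistics import NormalDist


def approx_power(effect_size: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-sample z-test for a mean difference.

    effect_size is the true difference in means in standard-deviation units.
    The opposite rejection tail is ignored, which is negligible unless the
    effect is tiny.
    """
    if n_per_group < 1:
        raise ValueError("n_per_group must be at least 1")
    std_normal = NormalDist()  # mean 0, standard deviation 1
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    # The difference in means has standard error sqrt(2 / n) in SD units,
    # so the expected z statistic is d / sqrt(2 / n) = d * sqrt(n / 2).
    expected_z = effect_size * (n_per_group / 2) ** 0.5
    return 1 - std_normal.cdf(z_crit - expected_z)


for n in (50, 50_000):
    for d in (0.8, 0.2, 0.02):
        print(f"n={n:>6} per group, effect={d:<4}: power ~ {approx_power(d, n):.2f}")
```

With 50 subjects per group, a large effect is detected almost every time, and a tiny one almost never. With tens of thousands per group, even the tiny effect usually comes out "significant". That is how the chaff gets in.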

Instead of endlessly detonating atOmic bombs that scatter so much statistical debris across the landscape that we can't see whether anything remains standing, small studies tell you clearly and replicably what really matters. Restraint focuses the mind.

And those are the topics--for example, major disease-related genes--that can be addressed by standard scientific methods and, hopefully, problems that can actually be solved (if science establishments actually want to solve problems rather than ensure that they remain unsolved and still fundable). And once they are solved, what remains will then perhaps become more easily detectable and/or worth studying.

If we did this, we'd have more, if smaller, grants to go around, to more people, younger people not yet irradiated by atOmic bombs, who might have cleverer or more cogent ideas. And where complexity matters, but its individual components can't be identified in the usual way, it will focus these younger and fresher minds on better ways to understand genetic causal complexity, and the evolution that brings it about.

Yes, we are but ants, and we know very well that it's not going to happen this way.

Wednesday, March 16, 2011

Japan's tragedy: the genetics of radiation exposure

By

Ken Weiss

The Japanese are undergoing severe trauma in many ways and, cruelly, Nature may have delivered some blows below the belt. Although the Japanese are acknowledged as the best in the world at designing and building nuclear power plants, Nature's wrath exceeded anything they could have anticipated. The earthquake and tsunami were the beginning of a terrible 'perfect storm' of events.

The detonations of atomic bombs over Hiroshima and Nagasaki, now nearly 70 years ago, had a curious impact on both the survivors and the rest of the world. At the time, radiation was a known mutagen, and everyone was concerned about the mutagenic effects of fallout, therapeutic radiation, and industrial exposures (as in uranium miners). The fear was that humans exposed to radiation would suffer mutations. According to the evolutionary theory of the time, many of these would be recessive, so they would be passed silently from generation to generation in unaffected carriers. In our huge societies, two carriers of the same mutation would be very unlikely to mate. But if we continued to accumulate harmful mutations, there could eventually be a very big genetic burden on society, as a frightening fraction of fetuses could be affected.
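The logic of that fear follows from Hardy-Weinberg proportions. If a recessive allele has frequency q, carriers make up 2pq of the population (with p = 1 - q), but only q² are affected. The sketch below computes these fractions, assuming random mating at a single locus. The function `recessive_genotypes` and the allele frequencies tried are hypothetical, chosen only to show the arithmetic.

```python
def recessive_genotypes(q: float) -> dict:
    """Genotype fractions for a recessive allele at frequency q.

    Assumes random mating and Hardy-Weinberg proportions at one locus.
    """
    if not 0.0 <= q <= 1.0:
        raise ValueError("q must be an allele frequency in [0, 1]")
    p = 1.0 - q
    carriers = 2 * p * q  # heterozygotes: unaffected, but transmit the allele
    return {
        "carriers": carriers,
        "affected": q * q,             # homozygotes: express the recessive trait
        "both_carriers": carriers**2,  # chance two random mates are both carriers
    }


for q in (0.001, 0.002):
    g = recessive_genotypes(q)
    print(f"q={q}: carriers {g['carriers']:.4%}, affected {g['affected']:.6%}, "
          f"random pair both carriers {g['both_carriers']:.6%}")
```

Carriers vastly outnumber affected individuals, which is why such alleles could spread silently. Doubling the allele frequency quadruples the affected fraction. That is why steadily accumulating new recessive mutations were feared to catch up with later generations even while the carriers themselves stayed healthy.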

Well, that turned out not to be much of a concern, for many reasons. Most directly, studies of the offspring of bomb survivors in Japan detected no elevated frequency of protein-coding mutations. The comparisons were with unexposed people in Japan, across different dose levels, and even with the Yanomami Indians of the Amazon basin (a main reason the South American studies were funded at the time).

Unfortunately, radiation didn't get a clean bill of health. Instead, about 5 years after the bombings, increased levels of leukemia were found in the Japanese survivors. And if all cancers are considered, the increase persisted for decades--essentially a life-long excess risk. This is the long tail of ionizing radiation damage.

Hopefully, the wind will carry the radiation released this week in Japan out to sea, where it will disperse and be of no concern. If it does hover over a city, those whose exposure is not immediately serious will have to be followed, perhaps for the rest of their lives, in an attempt at early detection of radiation-induced tumors. To do this effectively, estimates of each individual's exposure will undoubtedly be attempted, based on where they were and when, whether indoors or outdoors, and so on.

Radiation is a mutagen, but the type of risk depends on the type of exposure. WWII exposure in Japan was mainly a quick burst of gamma radiation passing through the body and causing mutations in cells along its path. In the current case, most of the dose will be inhaled or deposited on the skin. It could persist (e.g., inhaled isotopes could lodge in mucous membranes and decay there over the years). Much of the externally deposited radiation probably won't pierce the skin, but isotopes breathed in or ingested could be a problem for exposed organs (like the lining of the lungs). That could make detection somewhat easier, as fewer tissues may be at risk, though it doesn't make the resulting disease less serious. But these mutations would be in somatic, not germline, tissues. So although this isn't of much comfort now, it probably means, again, little damage to the gametes and hence little introduction of new mutation into the Japanese gene pool.
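How long an inhaled isotope can persist depends first of all on its physical half-life. The sketch below uses the standard decay law, fraction remaining = 0.5^(t / half-life). The half-lives are plugged in purely as illustrative examples: about 8 days for iodine-131 and about 30 years for caesium-137. The sketch ignores biological clearance, which also removes material from the body over time. The function name `fraction_remaining` is hypothetical.

```python
def fraction_remaining(elapsed: float, half_life: float) -> float:
    """Fraction of a radioactive isotope left after `elapsed` time.

    elapsed and half_life must be in the same units. Physical decay only;
    biological clearance from the body is not modeled.
    """
    if half_life <= 0:
        raise ValueError("half_life must be positive")
    return 0.5 ** (elapsed / half_life)


DAYS_PER_YEAR = 365.25
# Approximate half-lives in days, used only as illustrative examples.
half_lives = {"iodine-131": 8.02, "caesium-137": 30.17 * DAYS_PER_YEAR}
for name, t_half in half_lives.items():
    left = fraction_remaining(DAYS_PER_YEAR, t_half)
    print(f"{name}: {left:.2%} remains after one year")
```

A short-lived isotope is essentially gone within months. A long-lived one barely diminishes in a year, which is what makes lodged material a potentially lifelong source of exposure.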

We hope this is just a bit of speculation, and that successful control and friendly winds will blow this problem away, and leave the people of Japan safe--at least from radiation-induced disease. They already have plenty of problems to deal with.

Tuesday, March 15, 2011

Science without hypotheses? Not exactly penis-spine tingling

I’m still a bit bewildered after attending an afternoon of talks at the American Academy of Forensic Sciences (AAFS) conference held in Chicago a few weeks ago.

For one, I was stunned by the practical use of “mongoloid,” “caucasoid,” and “negroid” which I thought were long ago shelved as artifacts of physical anthropology’s racist past. But, whatever.

There was another surreal aspect to the meetings. I attended five talks and not one had a hypothesis. Based on these few talks, the conventional format appeared to be: Show photos of dead things. For example, even if the stated aim is to find anatomical indicators or predictors of intentional animal abuse, it was clear that one need not provide a single hypothesis and that one can conclude a slide show of dog strangulations and gunshot deaths with a simple, “animal abuse happens.” And if you thought it was impossible to make a disappointing presentation about a tiger attack, you’d have been as surprised as I was.